Reproducibility in ML: why it matters and how to achieve it

May 25, 2018

Reproducing results across machine learning experiments is painstaking work, and in some cases it is impossible. In this post, we detail why reproducibility matters, what exactly makes it so hard, and what we at Determined AI are doing about it.

Reproducibility is critical to industrial-grade model development. Without it, data scientists risk attributing a gain to a changed parameter when hidden sources of randomness are the real cause of the improvement. Reproducibility reduces or eliminates variation when rerunning failed jobs or prior experiments, making it essential for fault tolerance and iterative refinement of models. This capability becomes increasingly important as sophisticated models and real-time data streams push us towards distributed training across clusters of GPUs. This shift not only multiplies the sources of non-determinism but also increases the need for both fault tolerance and iterative model development.

Numerous experts in the deep learning community have already begun to draw attention to the importance of reproducibility, as in this excellent post by Pete Warden at Google. However, reproducibility in ML remains elusive, as the example below illustrates.

A Day in the Life of a New Data Scientist

You’ve been handed your first project at your new job. The inference time on an existing ML model is too slow, so the team wants you to analyze the performance tradeoffs of a few different architectures. Can you shrink the network and still maintain acceptable accuracy?

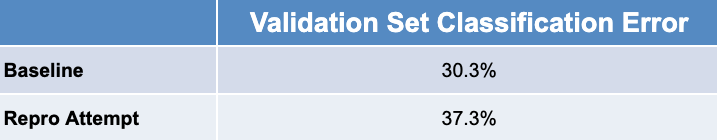

The engineer who developed the original model is on leave for a few months, but not to worry, you’ve got the model source code and a pointer to the dataset. You’ve been told the model currently reports 30.3% error on the validation set and that the company isn’t willing to let that number creep above 33.0%.

You start by training a model from the existing architecture so you’ll have a baseline to compare against. After reading through the source, you launch your coworker’s training script and head home for the day, leaving it to run overnight.

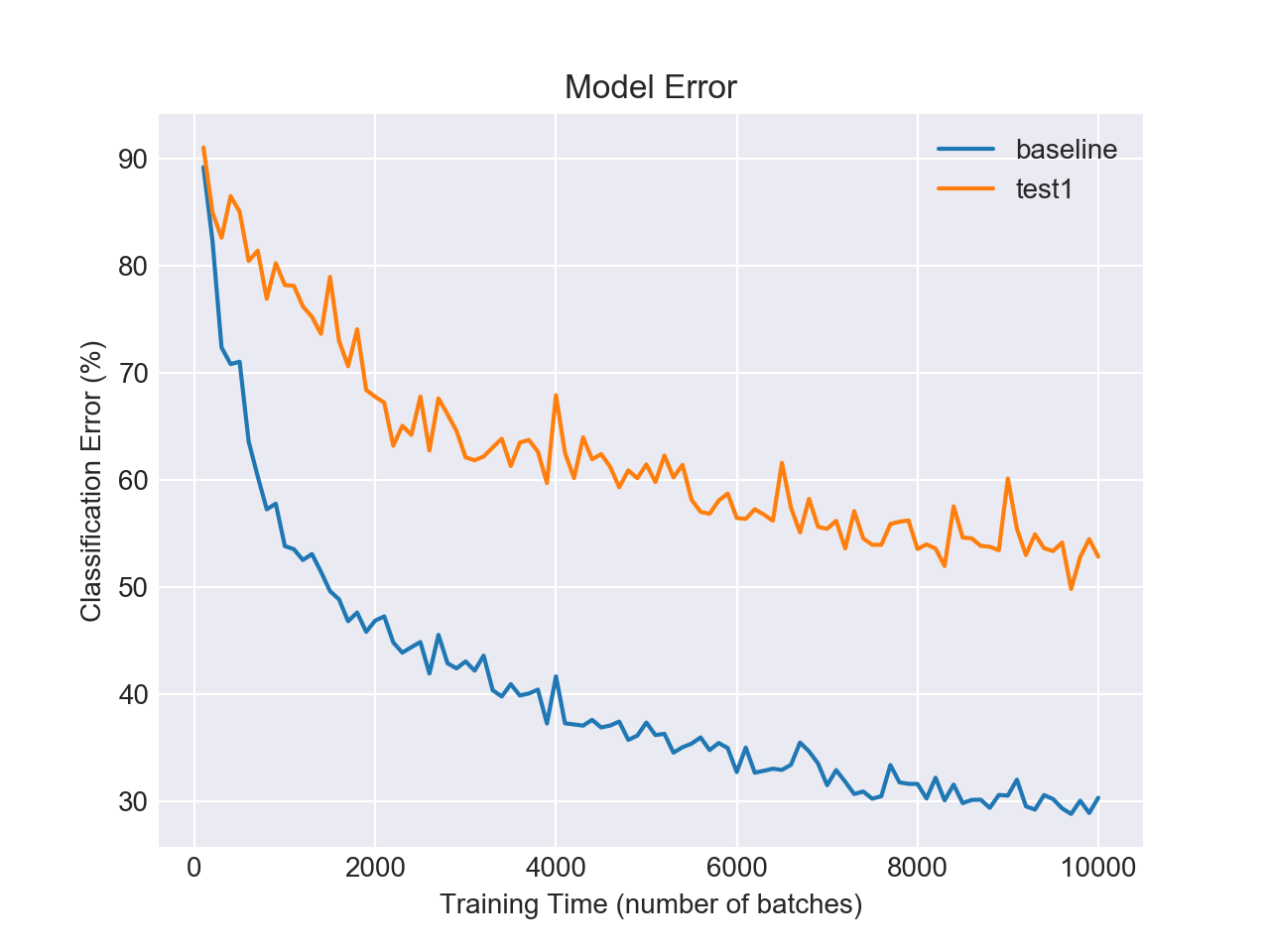

The next day you return to a bizarre surprise: the model is reporting 52.8% validation error after 10,000 batches of training. Looking at the plot of your model’s validation error alongside that of your teammate leaves you scratching your head. How did the error rate increase before you even made any changes?

After some debugging, you find two glaring issues explaining the divergent model performance:

- Additional training data: The team recently added several thousand images to the database. Since the root path to the data remained unchanged, the training script threw no warnings and included the new images in the dataset.

- Inconsistent hyperparameters: The training script included default hyperparameter values (e.g. learning rate, dropout probabilities), but it also allowed users to override them at runtime. Digging through your coworker’s results, you find that the hyperparameters of the best-of-breed model don’t match the defaults.
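Both issues can be caught before a long training run starts. As a minimal sketch (the function names `dataset_fingerprint` and `changed_hyperparameters` are hypothetical, not part of the training script in this story), you could hash the dataset directory and list every hyperparameter that deviates from the defaults, then compare both against what was recorded for the baseline:

```python
import hashlib
from pathlib import Path


def dataset_fingerprint(root):
    """Hash the relative path and contents of every file under root.

    Adding, removing, renaming, or modifying a file changes the digest.
    Files are read fully into memory, which is fine for a sketch but
    should be chunked for very large files.
    """
    root = Path(root)
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        digest.update(path.relative_to(root).as_posix().encode("utf-8"))
        digest.update(b"\0")
        digest.update(path.read_bytes())
    return digest.hexdigest()


def changed_hyperparameters(defaults, overrides):
    """Return {name: (default, override)} for every value that differs."""
    return {
        name: (defaults.get(name), value)
        for name, value in overrides.items()
        if defaults.get(name) != value
    }
```

Storing the fingerprint and the override report next to each set of results makes a silently grown dataset or a non-default learning rate visible on day one rather than after an overnight run.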

Having fixed these problems, you are confident your model, hyperparameters, and dataset now match exactly. You restart the training script, expecting to match the statistical performance of the baseline model. Instead, you see this:

You resist the urge to shout at your computer. Though the difference has narrowed, you’re still seeing a 7% gap in classification error!

Root Causes of Non-Determinism

Unfortunately, several sources contribute to run-to-run variation even when working with identical model code and training data. Here are some of the common causes:

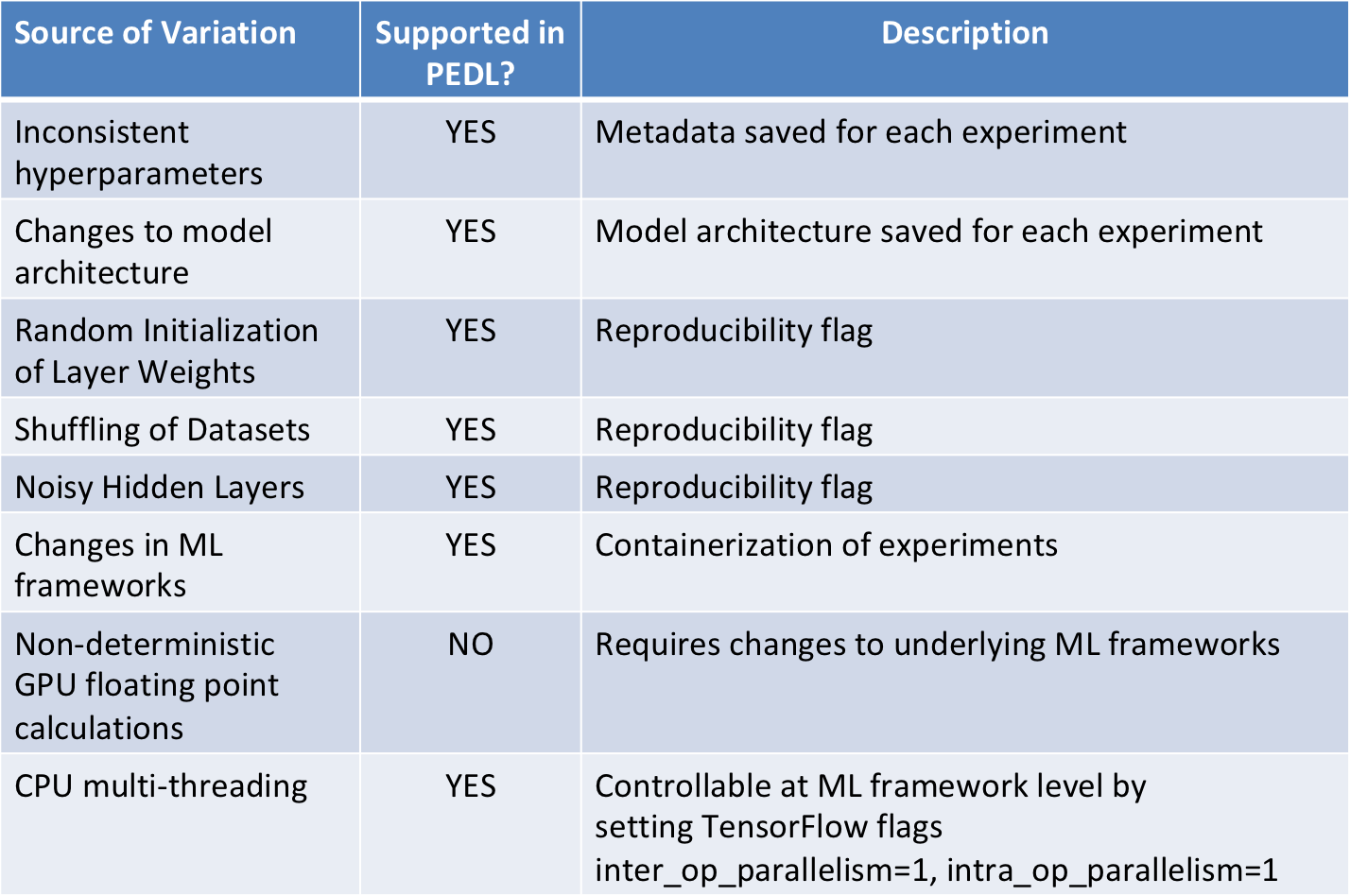

- Random initialization of layer weights: Many ML models set initial weight values by sampling from a particular distribution. This has been shown to increase the speed of convergence over initializing all weights to zeros [1, 2].

- Shuffling of datasets: The dataset is often randomly shuffled at initialization. If the model is written to use a fixed range of the dataset for validation (e.g. the last 10%), the contents of this set will not be consistent across runs. Even if the validation set is fixed, shuffling within the training dataset affects the order in which the samples are iterated over, and consequently, how the model learns.

- Noisy hidden layers: Certain NN architectures include layers with inherent randomness during training. Dropout layers, for example, exclude the contribution of a particular input node with probability p. While this may help prevent overfitting, it means the same input sample will produce different layer activations on any given iteration.

- Changes in ML frameworks: Updates to ML libraries can lead to subtly different behavior across versions, while migrating a model from one framework to another can cause even bigger discrepancies. For example, TensorFlow warns its users to “rely on approximate accuracy, not on the specific bits computed” across versions. Keras can behave differently when you swap between the Theano and TensorFlow backends without taking the appropriate steps.

- Non-deterministic GPU floating-point calculations: Certain functions in cuDNN, the NVIDIA Deep Neural Network library for GPUs, do not guarantee reproducibility across runs by default, including several convolutional operations. Furthermore, reproducibility is not guaranteed across different GPU architectures unless these operations are forcibly disabled by your ML library.

- CPU multi-threading: For CPU training, TensorFlow by default configures thread pools with one thread per CPU core to parallelize computation. This parallelization happens both within the execution of certain individual ops (controlled by intra_op_parallelism_threads) and between graph operations deemed independent (controlled by inter_op_parallelism_threads). While this speeds up training, the existing implementation introduces non-determinism.
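The first three causes above (weight initialization, shuffling, and noisy layers) all draw from a random number generator, so fixing its seed makes them repeatable. Here is a toy sketch using only Python's standard library; `simulate_run` is a hypothetical stand-in for a training run, not a real framework API. In practice each library in the stack (Python, NumPy, your ML framework) keeps its own generator, and each one has to be seeded.

```python
import random


def simulate_run(seed, num_samples=10, num_weights=4, dropout_p=0.5):
    """Toy stand-in for the random choices made during one training run."""
    rng = random.Random(seed)  # one private generator for the whole run

    # Random weight initialization, sampled from a Gaussian.
    weights = [rng.gauss(0.0, 0.1) for _ in range(num_weights)]

    # Shuffle the dataset, then hold out the last 10% for validation.
    indices = list(range(num_samples))
    rng.shuffle(indices)
    split = num_samples - num_samples // 10
    train_indices, validation_indices = indices[:split], indices[split:]

    # Dropout mask: each input node is dropped with probability dropout_p.
    keep_mask = [rng.random() >= dropout_p for _ in range(num_weights)]

    return weights, train_indices, validation_indices, keep_mask


# Same seed: identical weights, identical split, identical dropout mask.
assert simulate_run(seed=42) == simulate_run(seed=42)
```

Using a private `random.Random(seed)` instance rather than the module-level generator keeps other code that calls `random` from disturbing the sequence. Seeding does nothing for the last three causes in the list, which have to be handled separately.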

The Path to Reproducibility

To alleviate the frustration of our fictional data scientist, we must invest in making machine learning experiments reproducible. How might we achieve this? Well, we can start by capturing all the metadata associated with an experiment, and systematically addressing the common causes listed above. Determined automatically handles many of these challenges. Furthermore, using our explicit “reproducibility” flag, users can control the randomness affecting batch creation, weights, and noise layers across experiments. Table 1 provides more details as to how Determined tackles the problem of reproducibility.

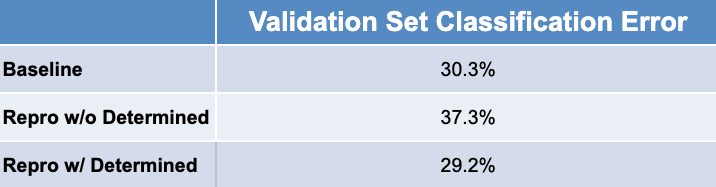

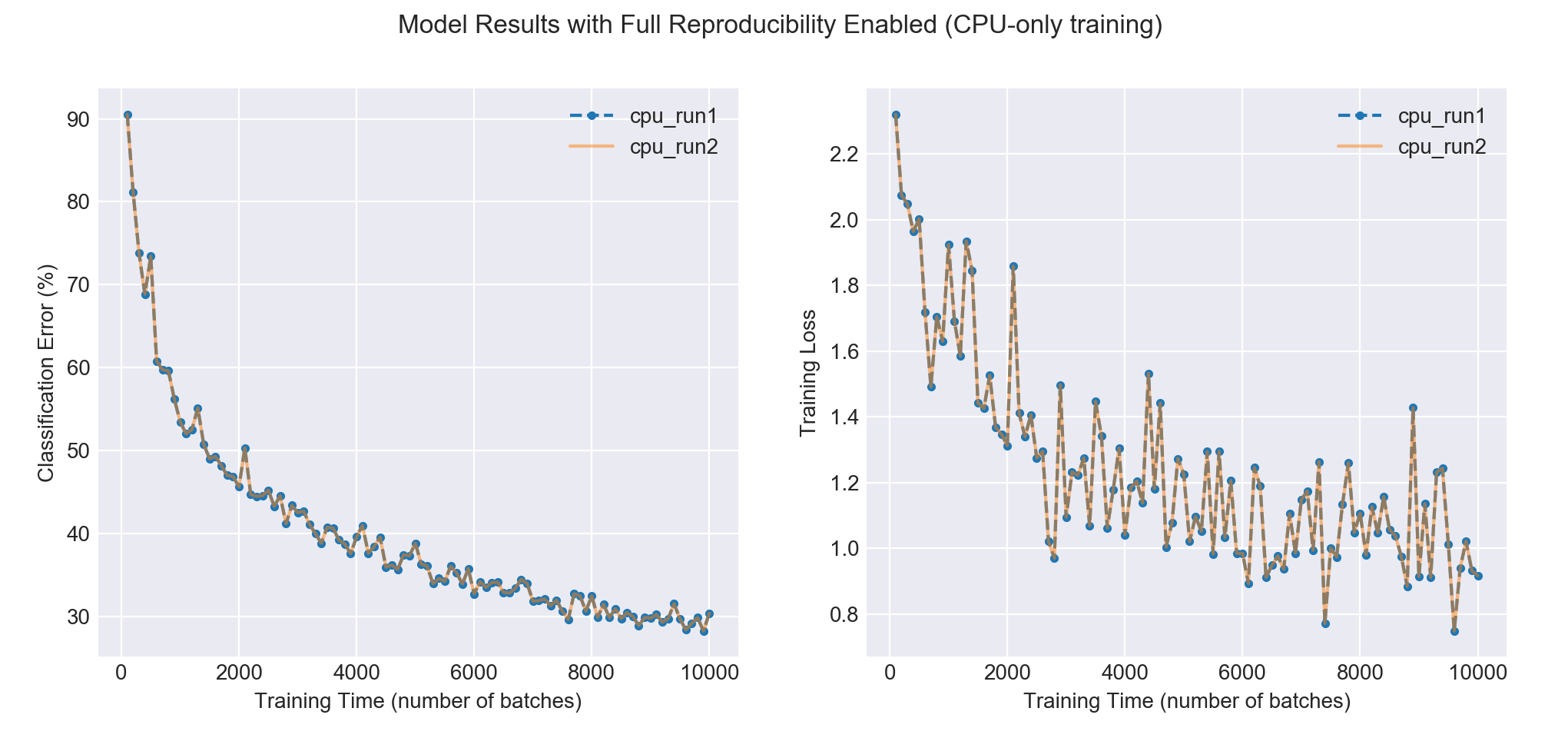
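To make "capturing all the metadata associated with an experiment" concrete, here is a minimal sketch of a run manifest. It is our own illustration under stated assumptions, not Determined's implementation: the names `build_manifest`, `save_manifest`, and `dataset_id` are hypothetical, and the fields shown are a starting point rather than a complete list.

```python
import json
import platform
from importlib import metadata


def package_version(name):
    """Return the installed version of a package, if it can be found."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return "not installed"


def build_manifest(seed, hyperparameters, dataset_id, packages=()):
    """Collect what is needed to rerun an experiment into a plain dict."""
    return {
        "seed": seed,
        "hyperparameters": dict(hyperparameters),
        "dataset_id": dataset_id,  # e.g. a content hash of the dataset
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": {name: package_version(name) for name in packages},
    }


def save_manifest(manifest, path):
    """Write the manifest as stable, human-readable JSON."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(manifest, handle, indent=2, sort_keys=True)
```

Recording library versions alongside the seed matters because, as noted earlier, the same code can behave differently after a framework update.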

Rerunning our experiment in Determined, here’s what we see:

As we hoped, the resulting validation error is now almost identical to our baseline. The remaining variation is due to the inherent non-determinism in the underlying cuDNN library used during GPU training (see the fifth cause listed above, on GPU floating-point calculations). Switching to CPU-only training and disabling multithreading, we see that Determined allows us to duplicate runs exactly, with training loss and validation error matching at each step.
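Why would parallel execution change a numerical result at all? One commonly cited reason, offered here as background rather than as a statement about any specific cuDNN kernel, is that floating-point addition is not associative. When threads accumulate partial results in whatever order they happen to finish, the same inputs can round differently from run to run. The effect is easy to see in plain Python:

```python
def sum_in_order(values):
    """Add values strictly left to right, like a single-threaded reduction."""
    total = 0.0
    for value in values:
        total += value
    return total


# The same three numbers, accumulated in two different orders.
forward = sum_in_order([1e16, 1.0, -1e16])    # the 1.0 is rounded away: 0.0
reordered = sum_in_order([1e16, -1e16, 1.0])  # the 1.0 survives: 1.0

assert forward != reordered

# The effect also shows up at everyday magnitudes.
assert (0.1 + 0.2) + 0.3 != 0.1 + (0.2 + 0.3)
```

A single-threaded run always accumulates in the same order, which is consistent with the exact match we see once multithreading is disabled.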

At Determined AI we are passionate about supporting reproducible machine learning workflows. By building first-class support for it into our tools, we hope to further increase the visibility of this issue. Given its complexity, fully enabling reproducibility will require effort across the stack, from infrastructure-level developers all the way up to ML framework authors. Ultimately, this will be well worth the investment if we want not just to reproduce, but to extend, the advances in machine learning to date.

Support for reproducibility is just one of the ways Determined makes it easier to build high-performance ML models. If you’re interested in making your company’s data science team more productive, contact us to learn more.