Blogs

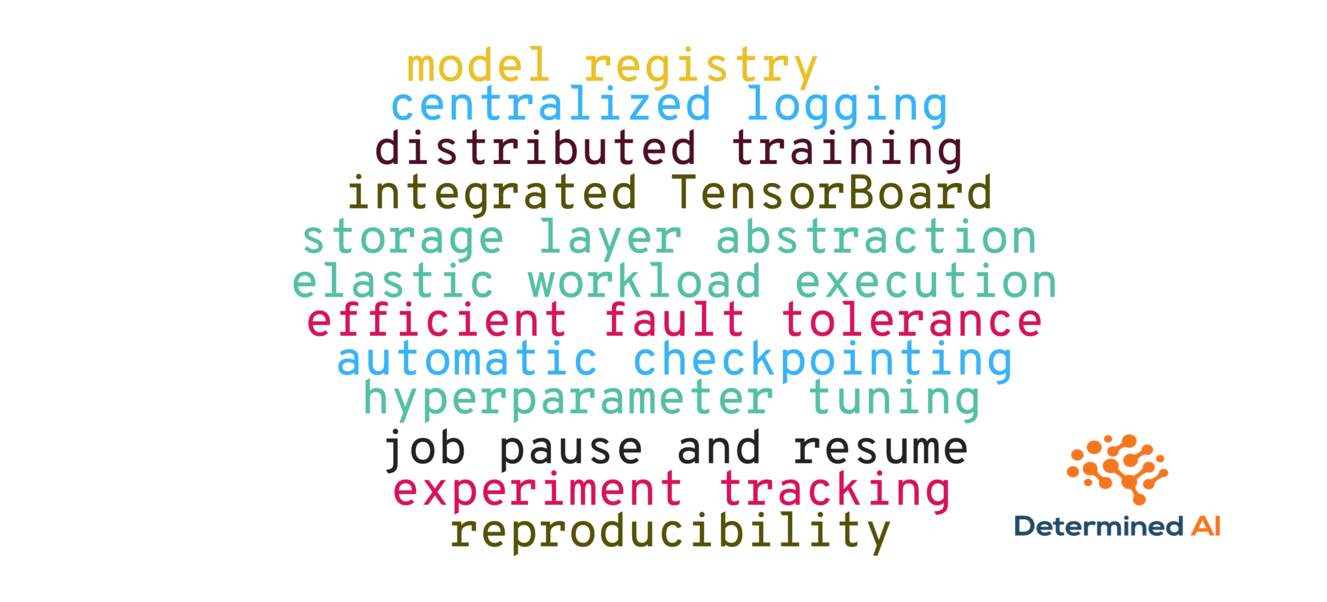

Weekly updates from our team on topics like large-scale deep learning training, cloud GPU infrastructure, hyperparameter tuning, and more.

NOV 24, 2020

Optimizing Horovod with Local Gradient Aggregation

Announcing local gradient aggregation, a new performance optimization for open-source distributed training with Horovod!

NOV 13, 2020

Why Slurm Makes Deep Learning Engineers Squirm

Slurm is a great HPC job scheduler but lacks key deep learning capabilities, such as hyperparameter tuning, distributed training, and experiment management.

NOV 10, 2020

Determined and Kubeflow: Better Together!

How do Determined and Kubeflow compare? Determined is better for deep learning training, but Determined and Kubeflow can also be effectively used together.

NOV 05, 2020

The Deep Learning Tool We Wish We Had In Grad School

Grad school would have been easier if we had access to Determined’s deep learning training platform.

OCT 08, 2020

Why Does No One Use Advanced Hyperparameter Tuning?

Takeaways from our experience building state-of-the-art hyperparameter tuning in Determined AI’s integrated deep learning training platform.

OCT 07, 2020

Train CycleGan on Multiple GPUS with Determined

Dramatically reduce the time to train GANs with Determined AI.

SEP 10, 2020

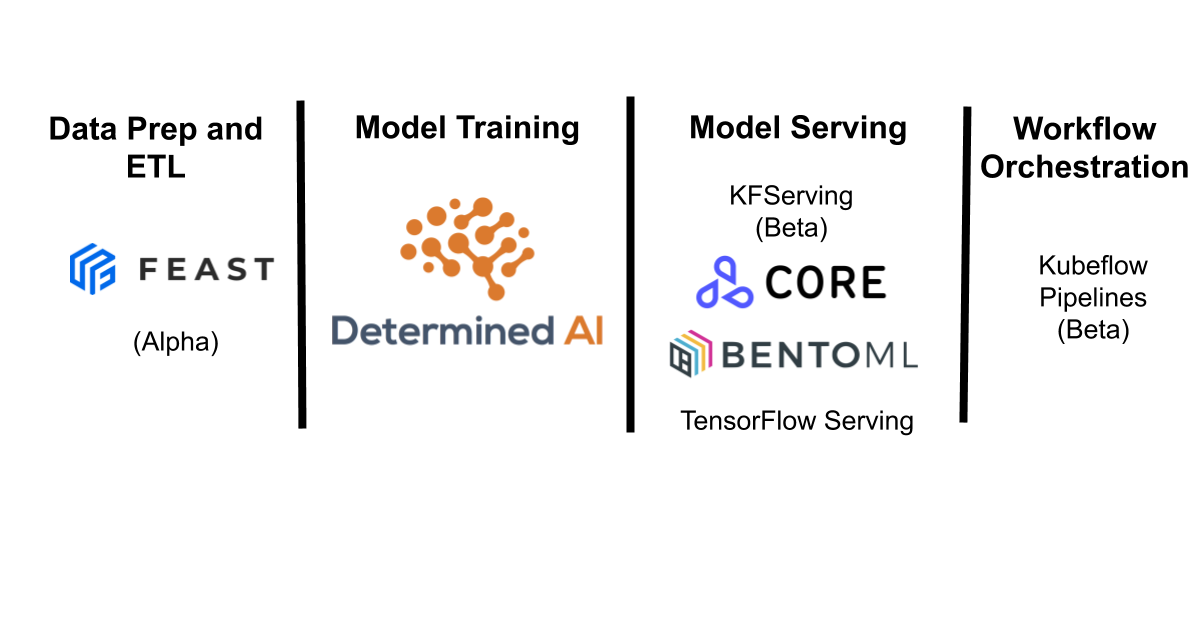

Lightning-fast ML pipelines with Determined and Kubeflow

Learn how to do production-grade MLOps with scalable, automated machine learning training and deployment using Determined, Kubeflow Pipelines, and Seldon Core.

SEP 03, 2020

Faster NLP with Deep Learning: Distributed Training

Training deep learning models for NLP tasks typically requires many hours or days to complete on a single GPU. In this post, we leverage Determined’s distributed training capability to reduce BERT for SQuAD model training time from hours to minutes, without sacrificing model accuracy.

AUG 11, 2020

End-to-End Deep Learning with Spark, Determined, and Delta Lake

How to build an end-to-end deep learning pipeline, including data preprocessing with Spark, versioned data storage with Delta Lake, distributed training with Determined, and batch inference with Spark.

AUG 05, 2020

YogaDL: a better approach to data loading for deep learning models

A better approach to loading data for deep learning models.