How to Build an Enterprise Deep Learning Platform, Part Three

June 24, 2020

Check out an example deep learning platform in action in this Jupyter Notebook!

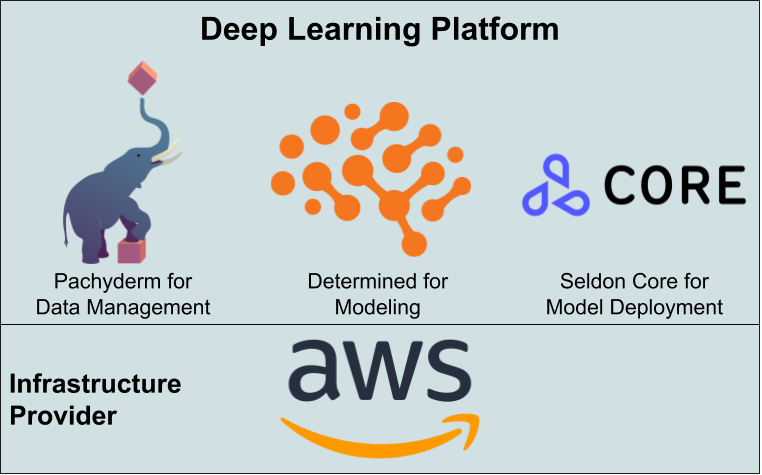

In parts one and two of this series, we discussed why you need advanced tools to scale and accelerate your deep learning team and showed you which open source tools will help fill those gaps. In this post, we’ll stitch together three open source tools into a platform that enables end-to-end deep learning model development.

As introduced in the previous parts, we’ll use a collection of open source tools for our sample platform: Pachyderm for data management, Determined for model development, and Seldon Core for deployment. We’ll install Pachyderm and Seldon Core on an EKS (Kubernetes) cluster backed by S3, and we’ll configure Determined to use our AWS account to automatically scale GPU instances to meet our team’s demand.

To see the platform in action, check out this Jupyter notebook example, which walks through:

- Creating data repositories with Pachyderm and using Pachyderm data pipelines to prepare the data for training
- Building a model within the Determined platform, and using its efficient hyperparameter tuning to quickly find a high-performing model
- Building and testing a Seldon Core endpoint using the trained model produced by Determined
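To make the last step a little more concrete, here is a minimal sketch of what testing a Seldon Core endpoint over REST might look like. It assumes the model is served with Seldon Core's v1 REST protocol behind an ingress, and the host, namespace, and deployment name are hypothetical placeholders rather than values from the notebook. The helper names (`build_prediction_request`, `predict`) are ours, not part of any library.

```python
import json
import urllib.request


def build_prediction_request(host, namespace, deployment, rows):
    """Build the URL and JSON body for a Seldon Core v1 REST prediction call.

    host, namespace, and deployment are assumptions: substitute the values
    for your own ingress and SeldonDeployment.
    """
    url = f"http://{host}/seldon/{namespace}/{deployment}/api/v1.0/predictions"
    # The v1 protocol accepts input rows as a nested list under data.ndarray.
    body = {"data": {"ndarray": rows}}
    return url, json.dumps(body).encode("utf-8")


def predict(host, namespace, deployment, rows, timeout=10.0):
    """POST the rows to the endpoint and return the decoded JSON response."""
    url, payload = build_prediction_request(host, namespace, deployment, rows)
    request = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Against a live cluster, a call such as `predict("my-ingress.example.com", "default", "my-model", [[0.1, 0.2, 0.3]])` would send one input row to the deployed model. Keeping the request construction separate from the network call makes it easy to check the payload shape before anything is deployed.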

This simple collection of tools (configurable in less than a day, and entirely open source) will greatly ease your ML team’s scaling challenges. Your team will be able to use this platform to produce more, higher-performing models and to move them into production quickly, where they can generate business value for your company.

If you have any questions about how you can use Determined as a part of your machine learning platform, join our community Slack or feel free to reach out to me directly!