Getting the most out of your GPU cluster for deep learning: part II

August 20, 2018

In this post, we discuss the missing key to fully leveraging your hardware investment: specialized software that understands the unique properties of deep learning workloads.

Finding the Full Deep Learning Solution

In Part I of this series, we discussed the benefits of configuring a cluster manager for a deep learning GPU cluster. In particular, we discussed DL infrastructure consisting of two principal components:

- A cluster manager (e.g., Kubernetes, DC/OS) that supports launching containers on GPUs; and

- A deep learning application framework (e.g., TensorFlow, Keras, PyTorch) that provides APIs for describing, training, and validating a neural network.

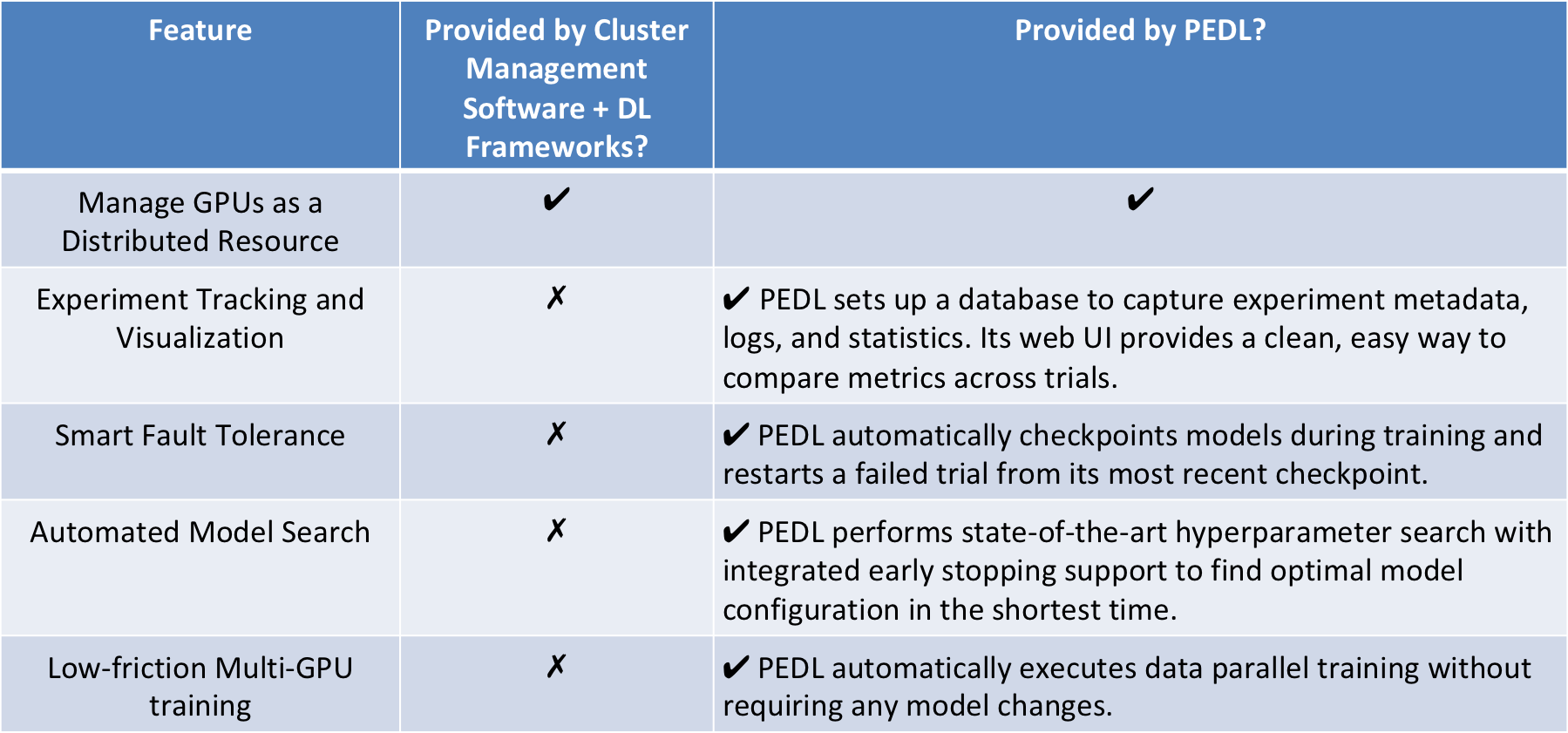

Unfortunately, this combination does not deliver a complete DL solution. Generic cluster managers don’t understand the semantics of deep learning, and DL frameworks are built primarily to define and train a single model at a time. The result? Engineers end up augmenting these tools with their own workarounds for many day-to-day tasks.

What is needed is software that understands deep learning model development at scale, something that can provide the glue between your cluster manager and DL framework.

After talking to countless teams that build and deploy deep learning models on a daily basis, we’ve compiled a list of the functionality this ideal software might include.

A DL Team’s Wish List

Experiment Tracking and Visualization

Reproducibility is widely accepted as an important element of DL workflows. Achieving it requires meticulous organization: input parameters, validation accuracy, and other relevant metrics from each experiment must be logged and stored. Unfortunately, if engineers want to retain the output of a containerized training job, they must set up their own persistent storage solution. Otherwise, when the container gets deleted, so do their results.

Setting up this metadata storage requires some forethought as to how to organize it. Simply outputting “logfile.txt” to a generic “results” directory will make comparing results a nightmare a few weeks down the line (assuming they don’t get accidentally overwritten in the meantime). Ideally, the DL engineering workflow would include support for managing metadata and visualizing experiment results.
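To make the organization problem concrete, here is a minimal sketch of per-experiment metadata storage. It assumes results live on a persistent filesystem, and the names `record_experiment`, `load_experiments`, `metadata.json`, and `val_accuracy` are our own illustrative choices, not part of any particular tool.

```python
import json
import tempfile
import uuid
from pathlib import Path


def record_experiment(results_root, hparams, metrics):
    """Write one experiment's inputs and outputs to its own directory."""
    # A unique ID per run means no run can overwrite another's results.
    run_dir = Path(results_root) / uuid.uuid4().hex
    run_dir.mkdir(parents=True)
    record = {"hparams": hparams, "metrics": metrics}
    (run_dir / "metadata.json").write_text(json.dumps(record, indent=2))
    return run_dir


def load_experiments(results_root):
    """Load every recorded run, best validation accuracy first."""
    records = [
        json.loads(path.read_text())
        for path in Path(results_root).glob("*/metadata.json")
    ]
    return sorted(records, key=lambda r: r["metrics"]["val_accuracy"], reverse=True)


# Hypothetical usage: a temporary directory stands in for persistent storage.
root = tempfile.mkdtemp()
record_experiment(root, {"lr": 0.1}, {"val_accuracy": 0.81})
record_experiment(root, {"lr": 0.01}, {"val_accuracy": 0.93})
runs = load_experiments(root)
```

Keeping hyperparameters and metrics together in one structured record per run is what makes later comparison a query rather than a manual search through log files.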

Automated Model Search

DL engineers may test thousands of variations of their model architecture and hyperparameters to find the configuration that delivers the best performance. Performing this search on a cluster entails either manually submitting one experiment per configuration or writing scripts to launch them in batches.

As these trials run, it may make sense to terminate those that appear less promising and start others in their place (a concept known as “early stopping”). Currently, engineers must handle this by manually monitoring job progress, which can involve comparing log files from hundreds or thousands of in-flight tasks. A better solution would automate this search over the experimenter’s parameters of interest.
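One simple way to automate this kind of early stopping is successive halving: train every configuration briefly, drop the worst performers, and repeat. The sketch below is only an illustration of that idea under toy assumptions. The `train_step` callback and `toy_train_step` scoring function are hypothetical stand-ins for real training, and we are not claiming this is how any particular product implements its search.

```python
def successive_halving(configs, train_step, rounds=3, keep_fraction=0.5):
    """Train all configs briefly, then repeatedly drop the worst performers.

    configs: list of hyperparameter dicts.
    train_step: hypothetical callback (config, state) -> (new_state, val_score)
                that trains a trial a little further and reports a validation
                score, where higher is better.
    """
    trials = [{"config": c, "state": None, "score": float("-inf")} for c in configs]
    for _ in range(rounds):
        for trial in trials:
            trial["state"], trial["score"] = train_step(trial["config"], trial["state"])
        # Keep the top fraction of trials and stop the rest early.
        trials.sort(key=lambda t: t["score"], reverse=True)
        survivors = max(1, int(len(trials) * keep_fraction))
        trials = trials[:survivors]
    return trials[0]


def toy_train_step(config, state):
    """Stand-in for real training: score grows with steps and peaks at lr=0.1."""
    steps = (state or 0) + 1
    score = steps / (steps + 1) - abs(config["lr"] - 0.1)
    return steps, score


best = successive_halving(
    [{"lr": lr} for lr in (1.0, 0.3, 0.1, 0.03, 0.01, 0.001, 0.5, 0.2)],
    toy_train_step,
)
```

Starting from eight configurations, only four receive a second round of training and only two a third, so most of the compute goes to the trials that look most promising.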

Smart Fault Tolerance

Run enough experiments and eventually one will crash. While cluster managers do provide fault tolerance, their default policy is to restart failed jobs from scratch. This makes sense given they know nothing about the particular process that crashed. However, in the context of a DL training job, this can end up wasting a lot of time. The smarter approach would be to save training progress periodically and then automatically restart from the last checkpoint after a crash.
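The checkpoint-and-resume pattern can be sketched in a few lines. This is a toy loop under stated assumptions: the "model" is a single number, the checkpoint is a JSON file, and the `crash_at` parameter exists only to simulate a failure. A real training job would save framework-specific model and optimizer state instead.

```python
import json
import os
import tempfile


def train(checkpoint_path, total_steps, checkpoint_every=10, crash_at=None):
    """Toy training loop that resumes from the last checkpoint if one exists."""
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)
    else:
        state = {"step": 0, "weight": 0.0}

    while state["step"] < total_steps:
        if crash_at is not None and state["step"] == crash_at:
            raise RuntimeError("simulated crash")
        state["weight"] += 0.5  # stand-in for one real optimizer step
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            # Write to a temp file and rename it, so a crash mid-write
            # cannot leave a corrupt checkpoint behind.
            tmp_path = checkpoint_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, checkpoint_path)
    return state


checkpoint = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    train(checkpoint, total_steps=50, crash_at=25)
except RuntimeError:
    pass  # the job died at step 25; the last checkpoint is from step 20
final_state = train(checkpoint, total_steps=50)  # resumes at step 20, not 0
```

Here the restarted job repeats only the five steps completed after the last checkpoint, rather than all twenty-five.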

Low-Friction Multi-GPU Training

As models and datasets grow, so does training time. One way to offset this is to use multi-GPU training, a technique that splits the work across multiple GPUs on the same machine. The most common approach uses synchronous data parallelism, where each GPU gets a copy of the model and a subset of the data. After each device computes gradients on its own subset, the gradients are combined (typically averaged) to produce a single weight update that is applied to every copy of the model.
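To show what synchronous data parallelism computes, here is a small pure-Python simulation. It is a sketch, not real multi-GPU code: the "devices" are just list slices, the model is the one-parameter function `y = w * x`, and the helper names are our own. It also assumes the batch size is divisible by the number of devices.

```python
def gradient(w, shard):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w, batch, num_devices, lr=0.01):
    """One synchronous step: shard the batch, compute gradients, average, update."""
    # Each simulated device gets an equal-sized slice of the batch
    # (assumes len(batch) is divisible by num_devices).
    shard_size = len(batch) // num_devices
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_devices)]
    # Every device starts from the same copy of the weight.
    local_grads = [gradient(w, shard) for shard in shards]
    # Combine step (an all-reduce on real hardware): average the gradients.
    avg_grad = sum(local_grads) / num_devices
    return w - lr * avg_grad


batch = [(float(x), 3.0 * x) for x in range(1, 9)]  # data generated by y = 3x
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, batch, num_devices=4)
```

With equal-sized shards, the averaged gradient matches the gradient computed over the whole batch on a single device, which is why this scheme trains the same model while spreading the computation out.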

While popular frameworks like TensorFlow and Keras support this feature, enabling it is not trivial. Their implementations require significant boilerplate code and careful orchestration. In a perfect world, the only thing required to start a multi-GPU training job would be to specify the desired number of GPUs to train on.

Determined: Deep Learning Training Platform

We started Determined AI with the understanding that there exists a disconnect between current DL infrastructure options and the functionality needed by DL teams. We built our product to address this, designing it from the ground up to handle the real-world challenges of deep learning. As a result, it is able to deliver everything from the above list and more, making it a fully end-to-end solution.

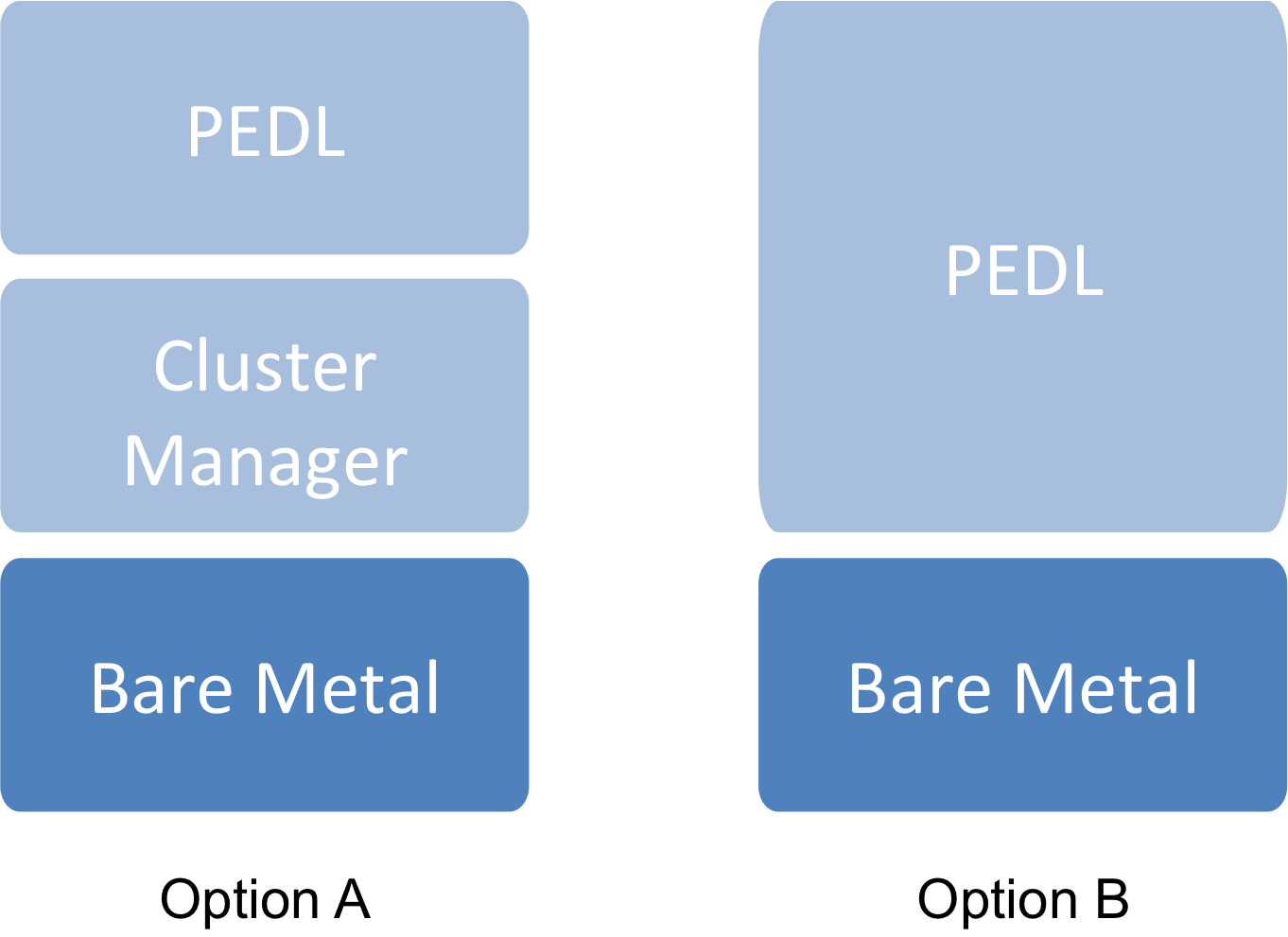

Installing Determined on your GPU cluster is the final step needed to supercharge your deep learning workflow. But what if you’re still managing your hardware via calendar or spreadsheet? Does this mean you have to first figure out how to set up a cluster manager?

Nope! Determined can also be installed directly on bare metal where it will provide all the necessary resource management for you.

Ready to take your deep learning team’s productivity to the next level? Determined is open source – check it out on GitHub to get started!