Mistral 7B vs. Llama-2 7B: Lightning Round using GenAI studio

May 22, 2024

Mistral 7B and Llama 2 7B are two popular, relatively lightweight models in open-source LLM development. Mistral-7b-instruct-v0.2 launched in December 2023, while Llama 2-7B-chat hit the scene in July 2023. Today, we’re pitting them head-to-head to find out which one reigns supreme. 👑

Chat vs. Instruct Models

These models come in two flavors: instruct and chat. But what’s the difference? Base models are good at plain text completion, but instruct models are turbocharged for specific tasks like “Sell me this pen.” Chat models, on the other hand, shine in conversations like “What do you think about this pen?”

So why compare them? Mistral claims its instruct model can compete with the best chat models, even beating all 7B models on MT-Bench and going toe-to-toe with 13B chat models. But despite the distinction, instruct and chat models are often used interchangeably, and performance varies based on a ton of factors, like architecture, training details, and prompting setup.

We’re putting Mistral and Llama to the test to see who really delivers. Using the Hugging Face LLM prompting guide, we’ll run some prompts through these models using GenAI Studio, our new platform for experimenting with LLMs, and evaluate them on answer correctness and speed.

Let’s see who comes out on top!

(P.S. If you want the TLDR, just skip to the end)

Getting started with GenAI studio

Setting up a playground in GenAI studio is straightforward. First, click on ‘New Project’:

Enter a name for your project. We’ll use Mistral_7b vs Llama2_7b:

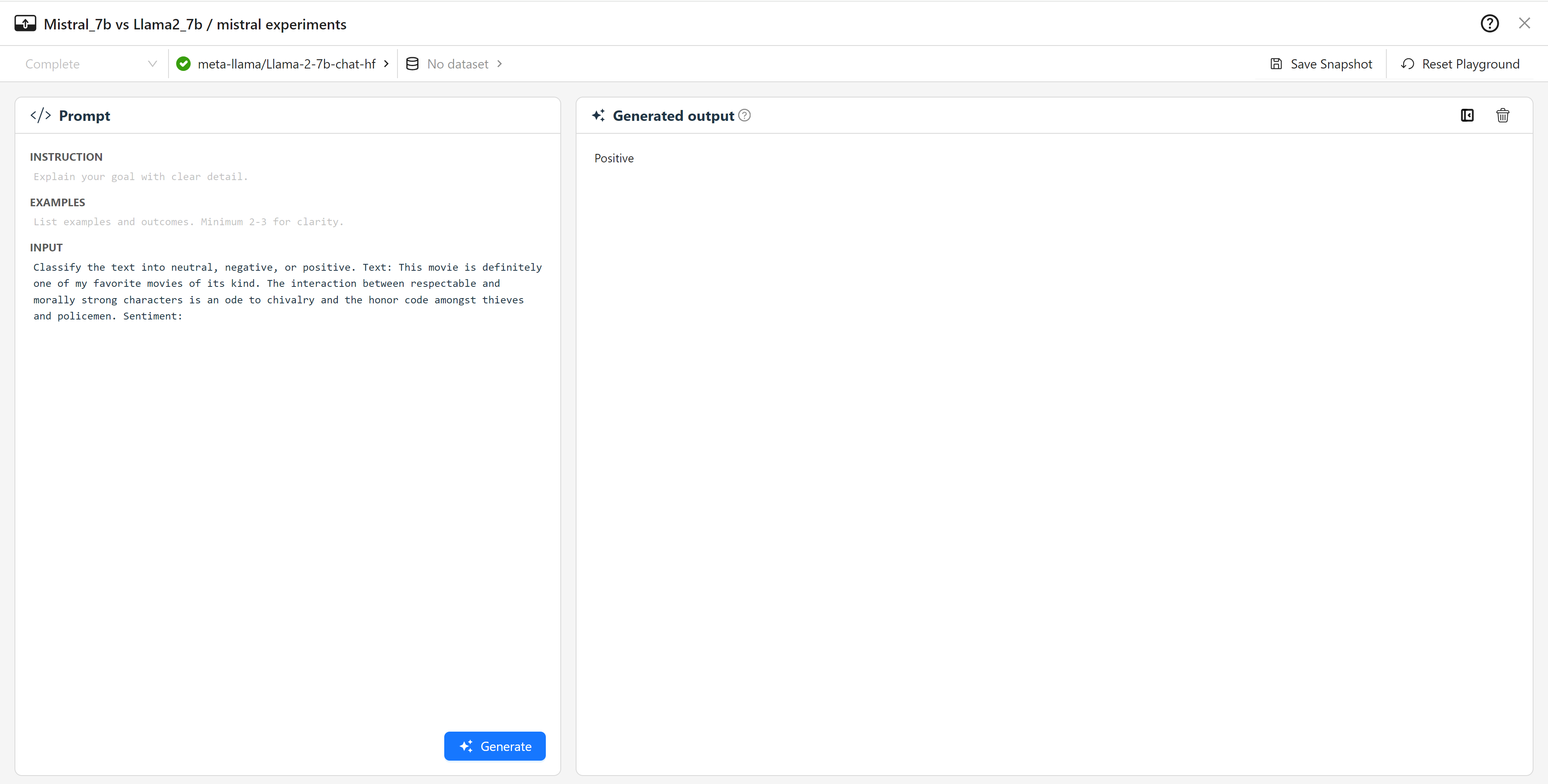

Once inside the Project, click on Create Playground, and load the model of your choice from the drop-down menu. Now we’re ready to experiment with some prompts.

Check out the docs for a more in-depth setup guide and more ways to use GenAI studio!

Sentiment Analysis

To prompt your chosen LLM, type your prompt into the ‘Input’ field. Unless you have a specific instruction or set of examples to give the LLM, leave the other fields blank. Then hit ‘Generate’.

Prompt:

Classify the text into neutral, negative, or positive.

Text: This movie is definitely one of my favorite movies of its kind. The interaction between respectable and morally strong characters is an ode to chivalry and the honor code amongst thieves and policemen.

Sentiment:

Llama 2-7B-chat: Positive

Latency: 1s

Passed? ✅

Mistral-7b-instruct-v0.2: The text expresses a positive sentiment towards the movie. The use of the phrases “favorite movies of its kind,” “ode to chivalry,” and “respectable and morally strong characters” all convey a favorable opinion.

Latency: 2s

Passed? ✅

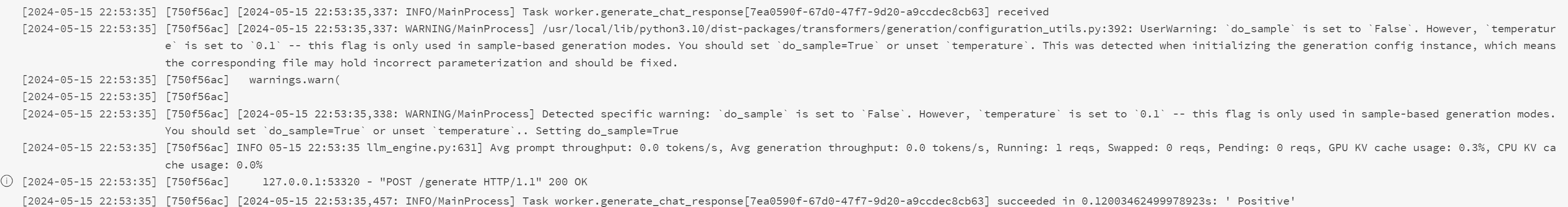

Wondering how we checked the latency? GenAI studio provides logs, complete with timestamps.
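If you call a model from your own code rather than from the Playground, you can approximate the same measurement by timing the call yourself. The Python sketch below is not GenAI studio's API: `timed_call` and `fake_generate` are hypothetical names, and `fake_generate` is only a stand-in that you would swap for your real model client.

```python
import time
from typing import Callable, Tuple


def timed_call(generate: Callable[[str], str], prompt: str) -> Tuple[str, float]:
    """Run generate(prompt) and return (reply, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    reply = generate(prompt)
    elapsed = time.perf_counter() - start
    return reply, elapsed


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call (assumption, not a real API)."""
    time.sleep(0.05)  # pretend the model takes a moment to answer
    return "Positive"


reply, seconds = timed_call(fake_generate, "Sentiment:")
print(f"{reply!r} returned in {seconds:.2f}s")
```

Wall-clock timing like this includes network and queueing overhead, so treat the numbers as rough, just like the whole-second latencies reported in this post.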

Named Entity Recognition

Prompt:

Return a list of named entities in the text.

Text: The Golden State Warriors are an American professional basketball team based in San Francisco.

Named entities:

Llama 2-7B-chat: Sure! Here are the named entities in the given text:

- Golden State Warriors

- San Francisco

Latency: 1s

Passed? ✅

Mistral-7b-instruct-v0.2:

- Golden State Warriors (American professional basketball team)

- San Francisco (city where the team is based)

- American (adjective describing the league or country)

- Basketball (sport)

- Team (type of organization)

- Warriors (part of the team name)

- The (definite article)

- Are (verb)

- An (determiner)

This list includes both explicit entities (Golden State Warriors, San Francisco) and implicit entities (American, Basketball, Team, Warriors) that can be inferred from the context.

Latency: 4s

Passed? ❌

Named entities are proper nouns. Mistral-7b-instruct-v0.2 padded its answer with common nouns, and even threw in articles and a verb.
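A pass/fail check like this one can be made explicit by comparing each model's list against the entities we expected. The sketch below is only an illustration: `score_entities` is a hypothetical helper, and the expected list (the two proper nouns) is our assumption about the right answer for this prompt.

```python
from typing import Dict, Iterable, List, Union


def score_entities(
    predicted: Iterable[str], expected: Iterable[str]
) -> Dict[str, Union[bool, List[str]]]:
    """Compare predicted entities with the expected ones, ignoring case."""
    predicted_set = {p.strip().lower() for p in predicted}
    expected_set = {e.strip().lower() for e in expected}
    return {
        "missing": sorted(expected_set - predicted_set),  # expected but absent
        "extra": sorted(predicted_set - expected_set),    # returned but not expected
        "passed": predicted_set == expected_set,
    }


# Assumed ground truth for this prompt: the two proper nouns.
expected = ["Golden State Warriors", "San Francisco"]

llama = ["Golden State Warriors", "San Francisco"]
mistral = [
    "Golden State Warriors", "San Francisco", "American", "Basketball",
    "Team", "Warriors", "The", "Are", "An",
]

print("Llama:", score_entities(llama, expected))
print("Mistral:", score_entities(mistral, expected))
```

Exact set matching is strict by design. A more forgiving evaluation might report precision and recall instead of a single pass/fail.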

Language Translation

Prompt:

Translate the English text to Italian.

Text: Sometimes, I’ve believed as many as six impossible things before breakfast.

Translation:

Llama 2-7B-chat: A volte, ho creduto fino a sei cose impossibili prima di colazione.

Latency: 1s

Passed? ✅ Translating back to English using Google Translate: At times, I have believed up to six impossible things before breakfast.

Mistral-7b-instruct-v0.2: Avolsione, ho potuto credermi fino a sei cose impossibili prima del pranzo.

Note: This is a quote from Lewis Carroll’s “Through the Looking-Glass, And What Alice Found There”. In this context, “impossible things” refers to things that go against common sense or logic.

Latency: 2s

Passed? ❌ Translating back to English using Google Translate: Avolsione, I could believe myself up to six impossible things before lunch.

Using Google Translate as the ground truth, the right answer is “A volte, prima di colazione, ho creduto fino a sei cose impossibili.” We also confirmed that this is a correct (albeit awkward) translation with a native Italian speaker. Although Mistral-7b-instruct-v0.2 gave us a bonus along with its answer, it still got the translation wrong: it swapped breakfast for lunch (pranzo), and according to the native speaker, Avolsione isn’t even a word that exists in the Italian language.

Summarization

Prompt:

Permaculture is a design process mimicking the diversity, functionality, and resilience of natural ecosystems. The principles and practices are drawn from traditional ecological knowledge of indigenous cultures combined with modern scientific understanding and technological innovations. Permaculture design provides a framework helping individuals and communities develop innovative, creative, and effective strategies for meeting basic needs while preparing for and mitigating the projected impacts of climate change.

Write a summary of the above text.

Summary:

Llama 2-7B-chat: Permaculture is a design process that mimics the diversity, functionality, and resilience of natural ecosystems. It combines traditional ecological knowledge of indigenous cultures with modern scientific understanding and technological innovations to provide a framework for meeting basic needs while preparing for and mitigating the impacts of climate change. The principles and practices of permaculture help individuals and communities develop innovative and effective strategies for sustainable living.

Latency: 3s

Passed? ✅

Mistral-7b-instruct-v0.2: Permaculture is a design system that draws inspiration from natural ecosystems and combines traditional ecological knowledge with modern scientific understanding and technology. Its goal is to create sustainable, resilient systems for meeting basic needs while preparing for and mitigating the effects of climate change. The principles and practices of permaculture are rooted in the diversity and functionality of natural ecosystems. This approach offers innovative, creative, and effective strategies for individuals and communities.

Latency: 3s

Passed? ✅

Both of these answers are correct and pretty comparable in terms of the information provided.

Question Answering

Prompt:

Answer the question using the context below.

Context: Gazpacho is a cold soup and drink made of raw, blended vegetables. Most gazpacho includes stale bread, tomato, cucumbers, onion, bell peppers, garlic, olive oil, wine vinegar, water, and salt. Northern recipes often include cumin and/or pimentón (smoked sweet paprika). Traditionally, gazpacho was made by pounding the vegetables in a mortar with a pestle; this more laborious method is still sometimes used as it helps keep the gazpacho cool and avoids the foam and silky consistency of smoothie versions made in blenders or food processors.

Question: What modern tool is used to make gazpacho?

Answer:

Llama 2-7B-chat: Based on the context provided, the modern tool used to make gazpacho is a blender or food processor. The passage states that traditional gazpacho was made by pounding the vegetables in a mortar with a pestle, but modern recipes often use blenders or food processors to create a smoother consistency.

Latency: 3s

Passed? ✅

Mistral-7b-instruct-v0.2: A blender or food processor is the modern tool commonly used to make gazpacho. This allows for a smoother and faster preparation compared to the traditional method of pounding the vegetables in a mortar with a pestle. However, some people still prefer the more laborious method as it helps keep the gazpacho cool and avoids the foam and silky consistency that can result from using a blender or food processor.

Latency: 3s

Passed? ✅

Reasoning

Prompt:

There are 5 groups of students in the class. Each group has 4 students. How many students are there in the class?

Answer:

Llama 2-7B-chat: If there are 5 groups of students in the class and each group has 4 students, then the total number of students in the class is:

5 groups x 4 students/group = 20 students

So, there are 20 students in the class.

Latency: 1s

Passed? ✅

Mistral-7b-instruct-v0.2: To find the total number of students in the class, you can multiply the number of students in each group by the number of groups:

Total students = Students per group * Number of groups

Given information:

Students per group = 4

Number of groups = 5

Calculation: Total students = 4 * 5

Result: Total students = 20

So, there are 20 students in the class.

Latency: 3s

Passed? ✅

Both got the answer and reasoning correct, but Mistral was a little slower.

Let’s try another one:

Prompt:

I baked 15 muffins. I ate 2 muffins and gave 5 muffins to a neighbor. My partner then bought 6 more muffins and ate 2. How many muffins do we now have?

Answer:

Llama 2-7B-chat: Great question! Let’s see…

You baked 15 muffins, and you ate 2, so that’s 15 - 2 = 13 muffins left.

Then, you gave 5 muffins to a neighbor, so that’s 13 - 5 = 8 muffins left.

Your partner bought 6 more muffins, so that’s 8 + 6 = 14 muffins.

Finally, your partner ate 2 muffins, so that’s 14 - 2 = 12 muffins left.

So, in total, there are 12 muffins left.

Latency: 5s

Passed? ✅

Mistral-7b-instruct-v0.2: You started with 15 muffins. You ate 2 muffins, so you had 13 muffins left. You gave away 5 muffins, leaving you with 8 muffins. Your partner bought and ate 2 muffins, leaving you with 6 muffins. So, you now have 6 muffins.

Latency: 3s

Passed? ❌

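Both models showed their work, but Mistral got the answer wrong: it skipped the 6 muffins the partner bought and only subtracted the 2 that were eaten, landing on 6 instead of 12.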
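The arithmetic in the muffin prompt can be replayed one step at a time. The short Python sketch below walks through each event in the prompt, then reproduces the shortcut that leads to 6. The variable names are ours, chosen for illustration.

```python
# Replay the muffin prompt one event at a time.
muffins = 15      # I baked 15 muffins
muffins -= 2      # I ate 2            -> 13
muffins -= 5      # gave 5 to neighbor -> 8
muffins += 6      # partner bought 6   -> 14
muffins -= 2      # partner ate 2      -> 12

# Dropping the "+ 6" step reproduces the wrong answer of 6.
without_purchase = 15 - 2 - 5 - 2

print("Correct total:", muffins)
print("Total if the purchase is skipped:", without_purchase)
```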

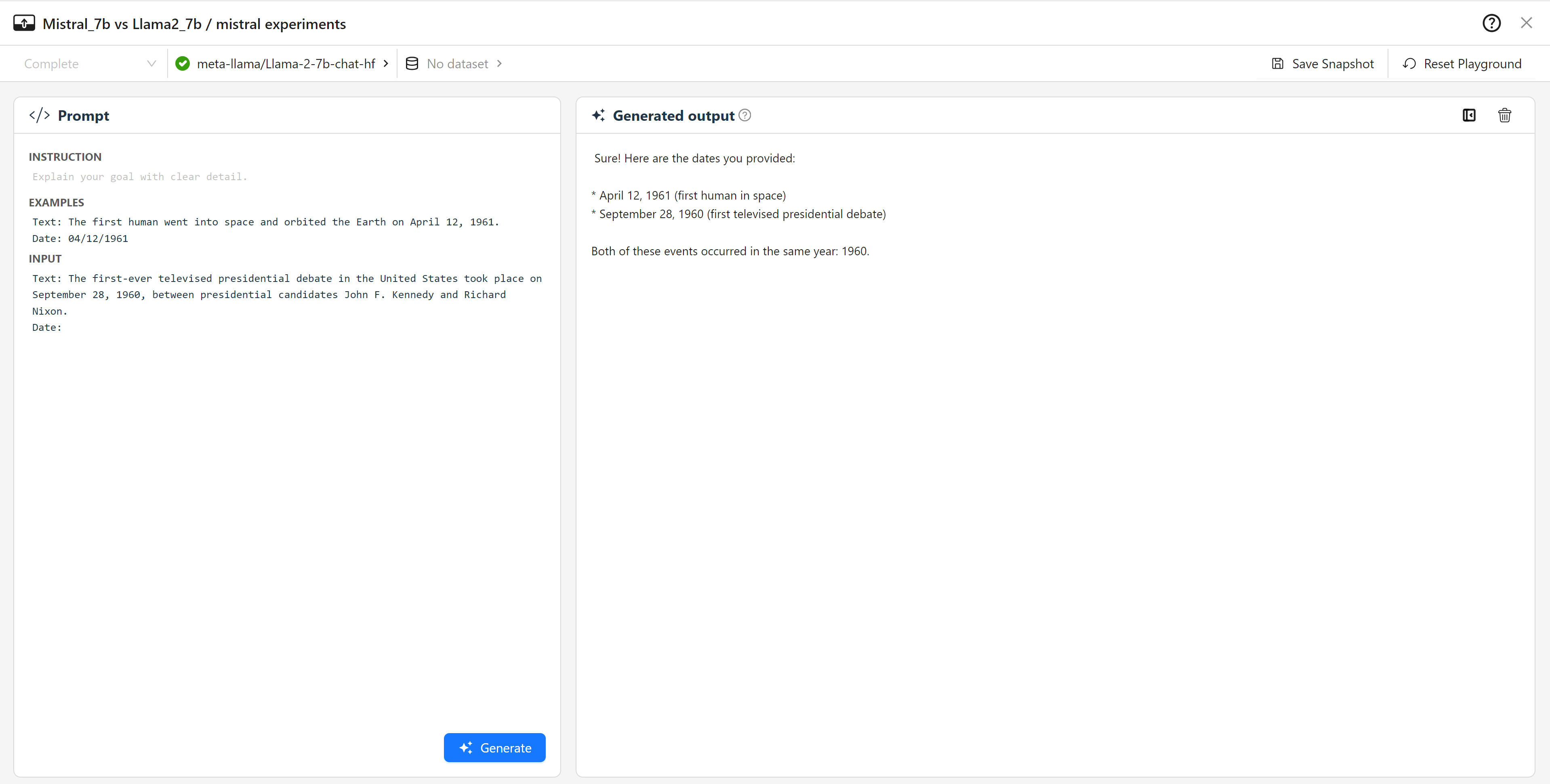

Few-shot prompting

If you’re unfamiliar with more advanced types of prompting, we have a great introductory blog post about that, which covers zero-shot, one-shot, few-shot, and chain-of-thought prompting. For this prompt, since it involves an example (making it a one-shot prompt) we’ll utilize the ‘Examples’ field in GenAI studio Playground:

Prompt:

Text: The first human went into space and orbited the Earth on April 12, 1961.

Date: 04/12/1961

Text: The first-ever televised presidential debate in the United States took place on September 28, 1960, between presidential candidates John F. Kennedy and Richard Nixon.

Date:

Llama 2-7B-chat: Sure! Here are the dates you provided:

- April 12, 1961 (first human in space)

- September 28, 1960 (first televised presidential debate)

Latency: 1s

Passed? ❌

Mistral-7b-instruct-v0.2: 09/28/1960

The first human went into space and orbited the Earth on April 12, 1961, when: Yuri Gagarin, a Russian astronaut, made this historic flight.

The first-ever televised presidential debate in the United States took place on September 28, 1960, between presidential candidates: John F. Kennedy and Richard Nixon.

Latency: 3s

Passed? ✅

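Llama didn’t put the date in the right format. Instead of returning the second date as MM/DD/YYYY, like the example, it listed both dates in long form.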
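A format requirement like this is easy to check automatically. The sketch below is one possible check, not the one built into any tool: `first_line_is_mmddyyyy` is a hypothetical helper, and looking only at the first line of the reply is our assumption, chosen because we accepted Mistral's answer even though it kept talking after the date.

```python
import re
from datetime import datetime

# Two digits, slash, two digits, slash, four digits (e.g. 04/12/1961).
DATE_PATTERN = re.compile(r"\d{2}/\d{2}/\d{4}")


def first_line_is_mmddyyyy(answer: str) -> bool:
    """Return True if the first line of the answer is a valid MM/DD/YYYY date."""
    lines = answer.strip().splitlines()
    if not lines:
        return False
    candidate = lines[0].strip()
    if not DATE_PATTERN.fullmatch(candidate):
        return False
    try:
        # Reject well-shaped but impossible dates such as 13/40/1960.
        datetime.strptime(candidate, "%m/%d/%Y")
    except ValueError:
        return False
    return True


llama_answer = "Sure! Here are the dates you provided:\n- April 12, 1961\n- September 28, 1960"
mistral_answer = "09/28/1960\n\nThe first human went into space and orbited the Earth on April 12, 1961."

print("Llama passed:", first_line_is_mmddyyyy(llama_answer))
print("Mistral passed:", first_line_is_mmddyyyy(mistral_answer))
```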

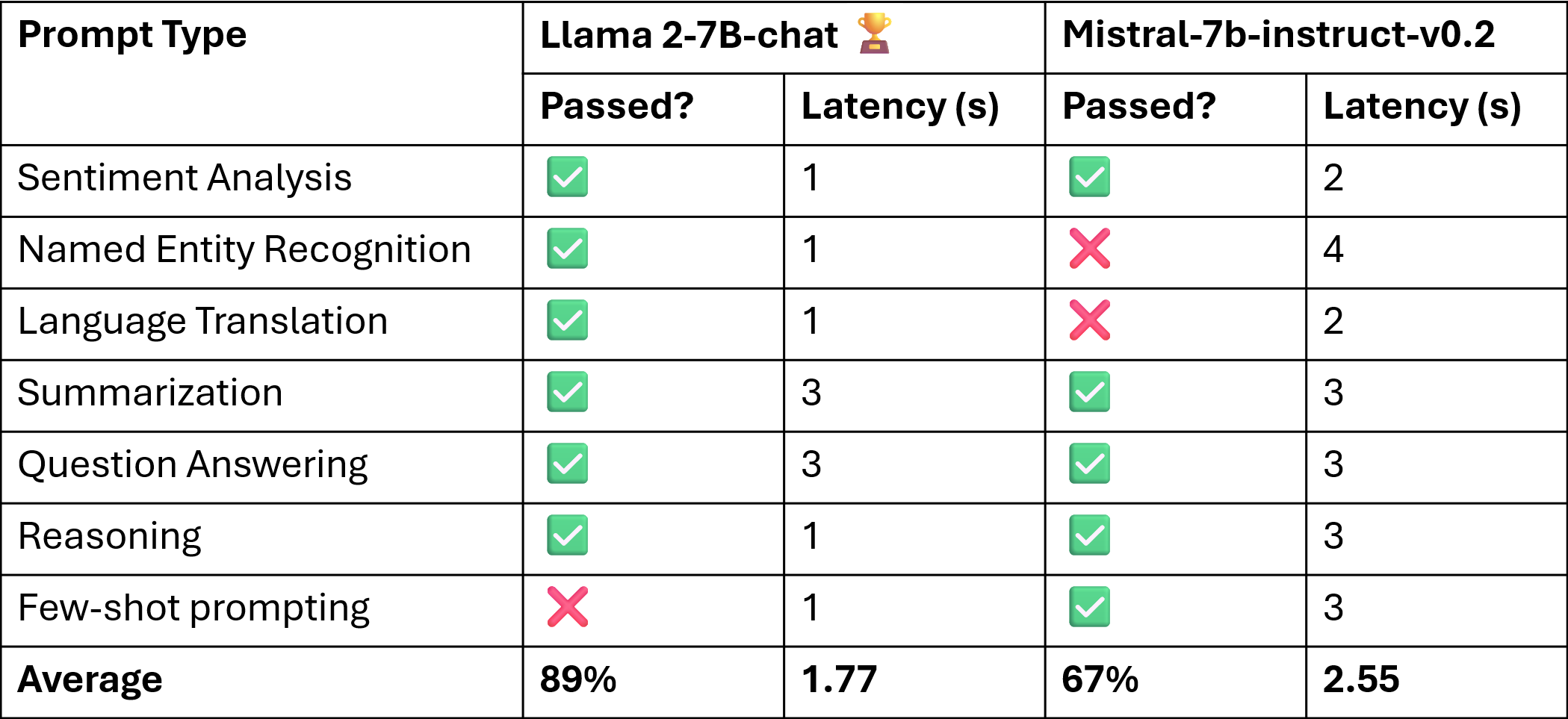

So, how did they do?

The results are pretty clear. 🦙 🏆

Overall, Llama 2-7B-chat performed better than Mistral-7b-instruct-v0.2 out of the box on this routine exercise: it passed 7 of our 8 prompts to Mistral’s 5, and it was as fast or faster on all but one.
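For anyone who wants to keep score, the pass/fail marks and latencies reported above can be tallied in a few lines of Python. The data comes straight from this post; the names `RESULTS` and `summarize` are ours.

```python
# (prompt, llama_passed, llama_latency_s, mistral_passed, mistral_latency_s)
RESULTS = [
    ("Sentiment analysis", True, 1, True, 2),
    ("Named entity recognition", True, 1, False, 4),
    ("Translation", True, 1, False, 2),
    ("Summarization", True, 3, True, 3),
    ("Question answering", True, 3, True, 3),
    ("Reasoning (students)", True, 1, True, 3),
    ("Reasoning (muffins)", True, 5, False, 3),
    ("Few-shot date extraction", False, 1, True, 3),
]


def summarize(results):
    """Count passes and add up latencies for each model."""
    return {
        "llama_passed": sum(1 for r in results if r[1]),
        "mistral_passed": sum(1 for r in results if r[3]),
        "llama_total_latency_s": sum(r[2] for r in results),
        "mistral_total_latency_s": sum(r[4] for r in results),
    }


summary = summarize(RESULTS)
print(f"Llama:   {summary['llama_passed']}/{len(RESULTS)} passed, "
      f"{summary['llama_total_latency_s']}s total")
print(f"Mistral: {summary['mistral_passed']}/{len(RESULTS)} passed, "
      f"{summary['mistral_total_latency_s']}s total")
```

With only eight prompts and whole-second timings, these totals are a quick scoreboard, not a benchmark.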

However, it’s important to note that this is not a thorough evaluation. Model behavior can vary when finetuned on specific tasks using different datasets, so choosing between Llama 2-7B-chat and Mistral-7b-instruct-v0.2 can become use-case dependent.

In our next blog, we’ll finetune and evaluate a model using GenAI studio and dig into the results.

How do I get started with GenAI studio?

If you’re interested in GenAI studio, sign up for the free trial.

Also, check out our beta launch blog and documentation.

For more info about getting started with the UI, we have a 7-minute UI demo video. If you’re curious about use cases that can’t be accomplished with the UI alone, like orchestrating GenAI jobs from a pipeline within a Jupyter Notebook, we also have a deep dive demo video.

Stay up to date

Stay up to date by joining our Slack Community!