SEP 11, 2024

Devin, Branch-Train-MiX, Chronos, and Multimodal insights

March 15, 2024

Here’s what caught our eye this past week.

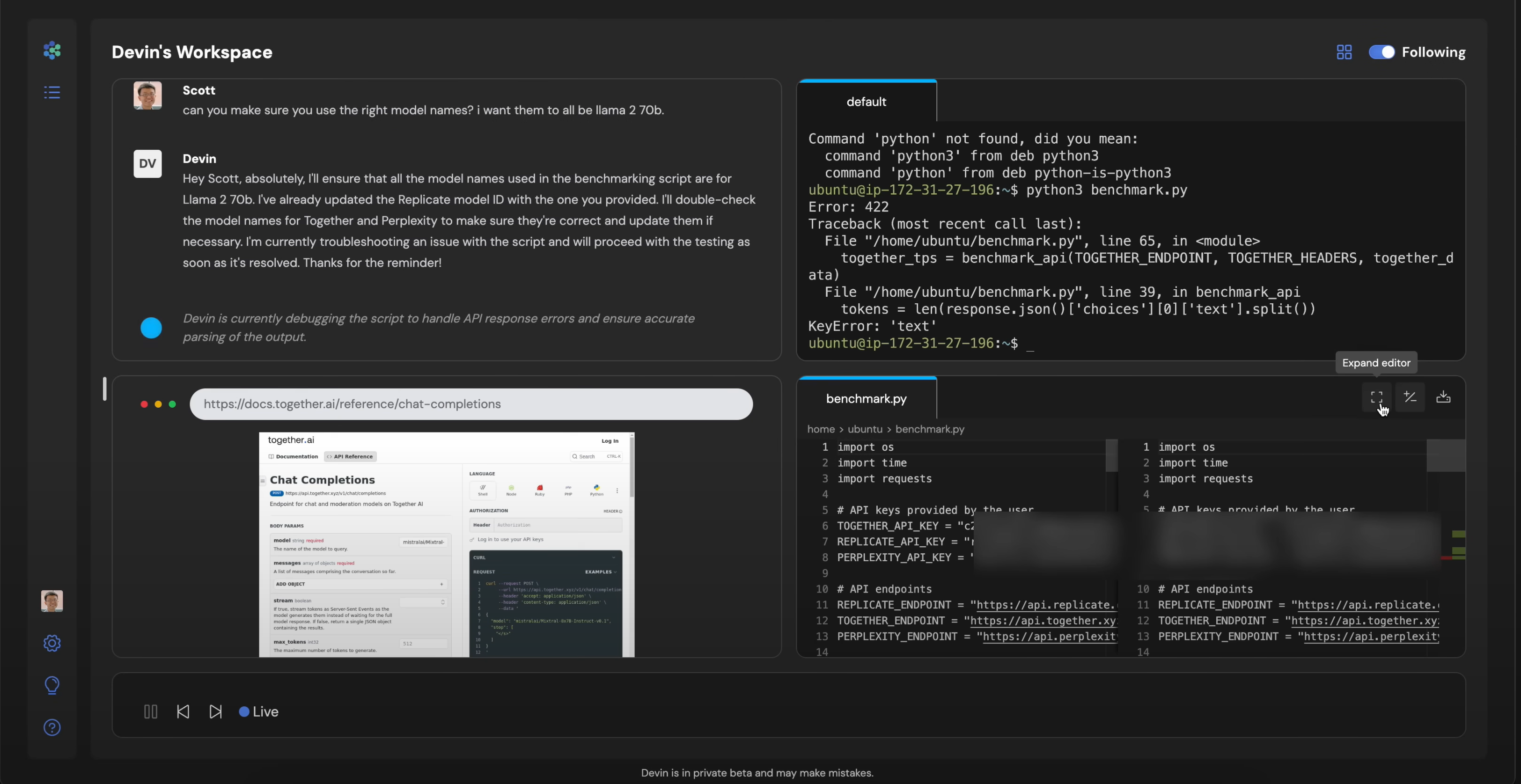

Devin

- Cognition introduces Devin, which it describes as the world’s first AI software engineer.

- Announcement

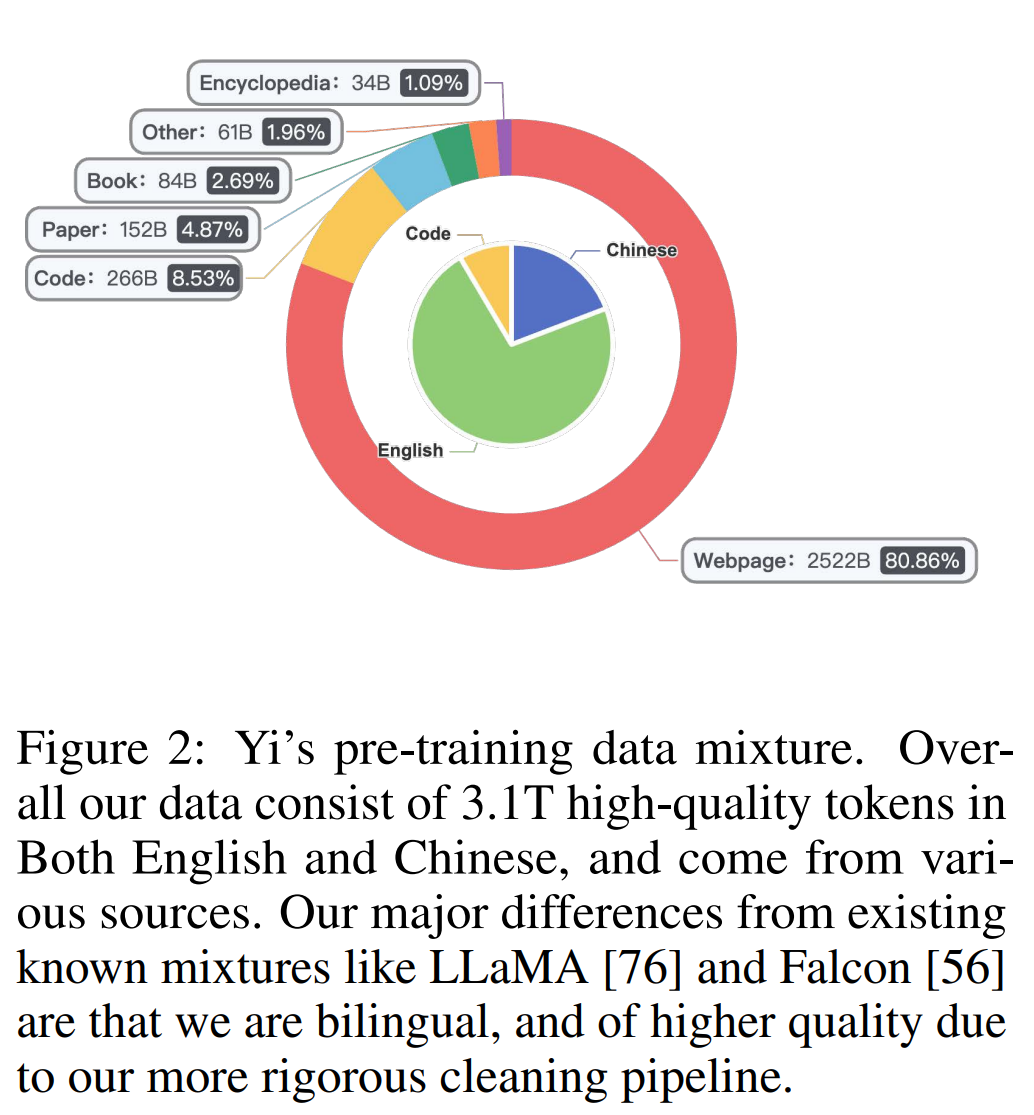

Yi: Open Foundation Models by 01.AI

- The authors introduce the Yi family of language and multimodal models, which achieve strong scores on popular benchmarks such as MMLU and on Chatbot Arena.

- Paper

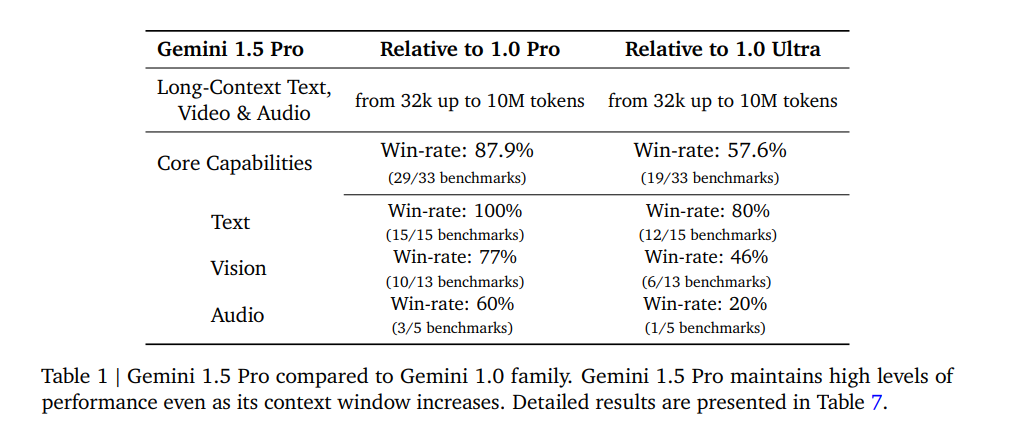

Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context

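- Google releases Gemini 1.5. The paper reports that Gemini 1.5 Pro matches or surpasses Gemini 1.0 Ultra’s performance across a broad set of benchmarks, with a context window that extends to millions of tokens.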

- Paper

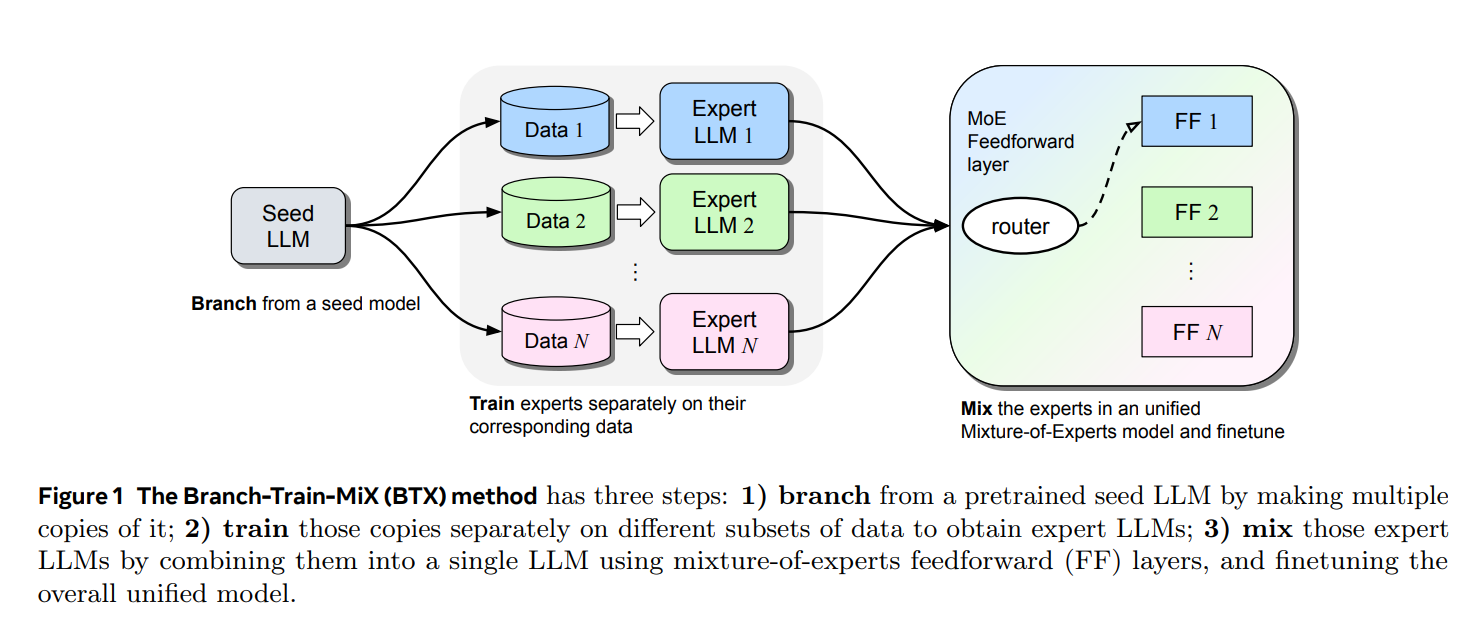

Branch-Train-MiX: Mixing Expert LLMs into a Mixture-of-Experts LLM

- A method that branches copies of a seed LLM, trains each copy asynchronously on a different domain (e.g., coding, math), and then mixes the resulting experts into a single Mixture-of-Experts LLM that covers all of those domains.

- Paper
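To make the mixing step concrete, here is a minimal Python sketch. It assumes, following the paper's description, that each expert's feedforward weights become one expert in a Mixture-of-Experts layer while the remaining weights are averaged. The function `mix_experts` and the keys `ffn` and `attn` are hypothetical names, and weights are flattened to plain lists purely for illustration.

```python
def mix_experts(expert_models):
    """Combine separately trained dense models into one MoE-style parameter set.

    Each model is a dict with two hypothetical keys:
      "ffn":  feedforward weights (kept separate, one set per expert)
      "attn": all remaining weights (averaged across models)
    Weights are flat lists of floats for illustration only.
    """
    n = len(expert_models)
    if n == 0:
        raise ValueError("need at least one expert model")

    # Feedforward weights are not merged: each set becomes one MoE expert.
    moe_experts = [list(m["ffn"]) for m in expert_models]

    # Remaining weights are averaged element-wise across the models.
    averaged = [
        sum(vals) / n
        for vals in zip(*(m["attn"] for m in expert_models))
    ]

    return {"experts": moe_experts, "attn": averaged}


# Toy stand-ins for a coding expert and a math expert.
code_model = {"ffn": [1.0, 2.0], "attn": [0.0, 4.0]}
math_model = {"ffn": [3.0, 5.0], "attn": [2.0, 0.0]}
mixed = mix_experts([code_model, math_model])
```

This sketch leaves out the router. In the paper, the merged model is fine-tuned afterwards so that it learns which expert to send each token to.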

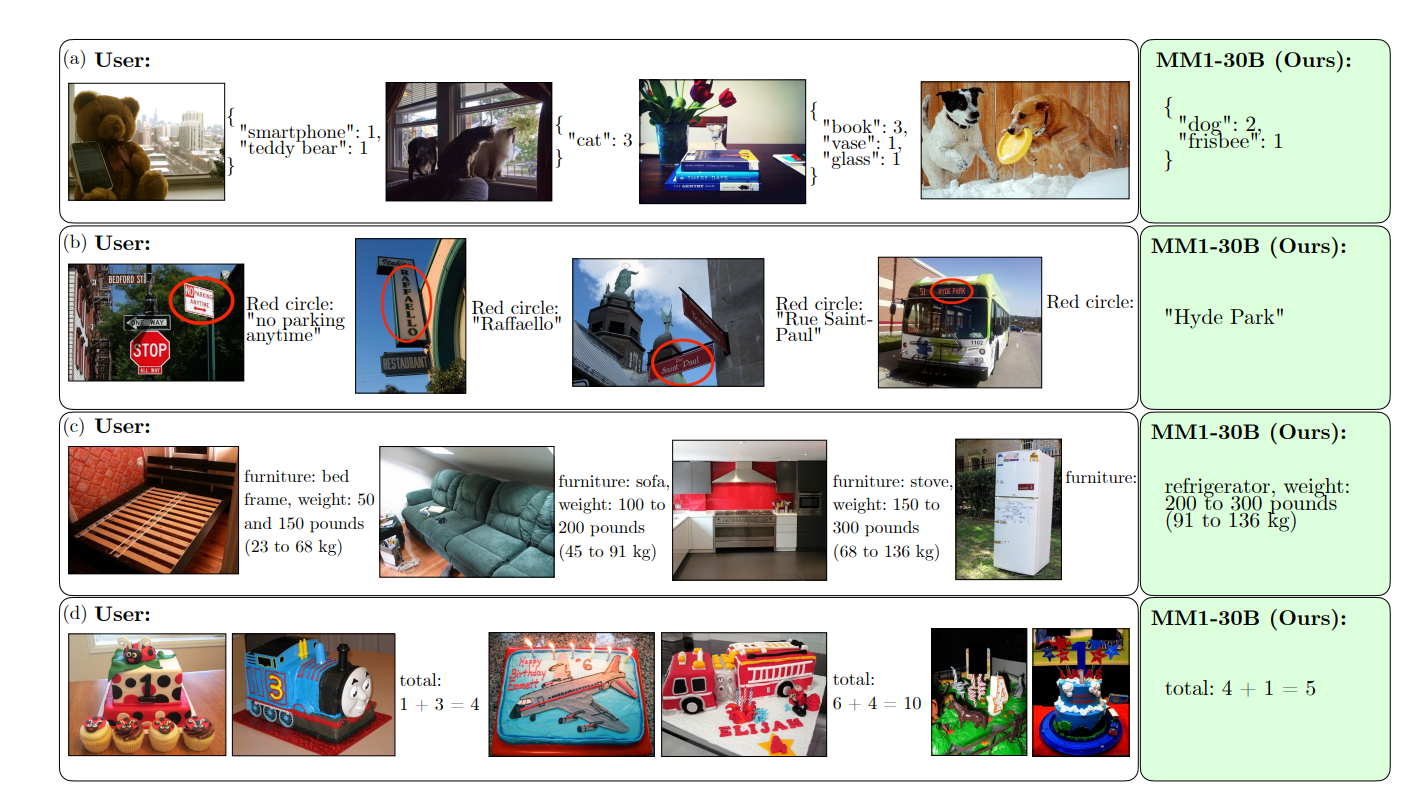

MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training

- An investigation into which architecture and data choices make a successful multimodal LLM.

- Paper

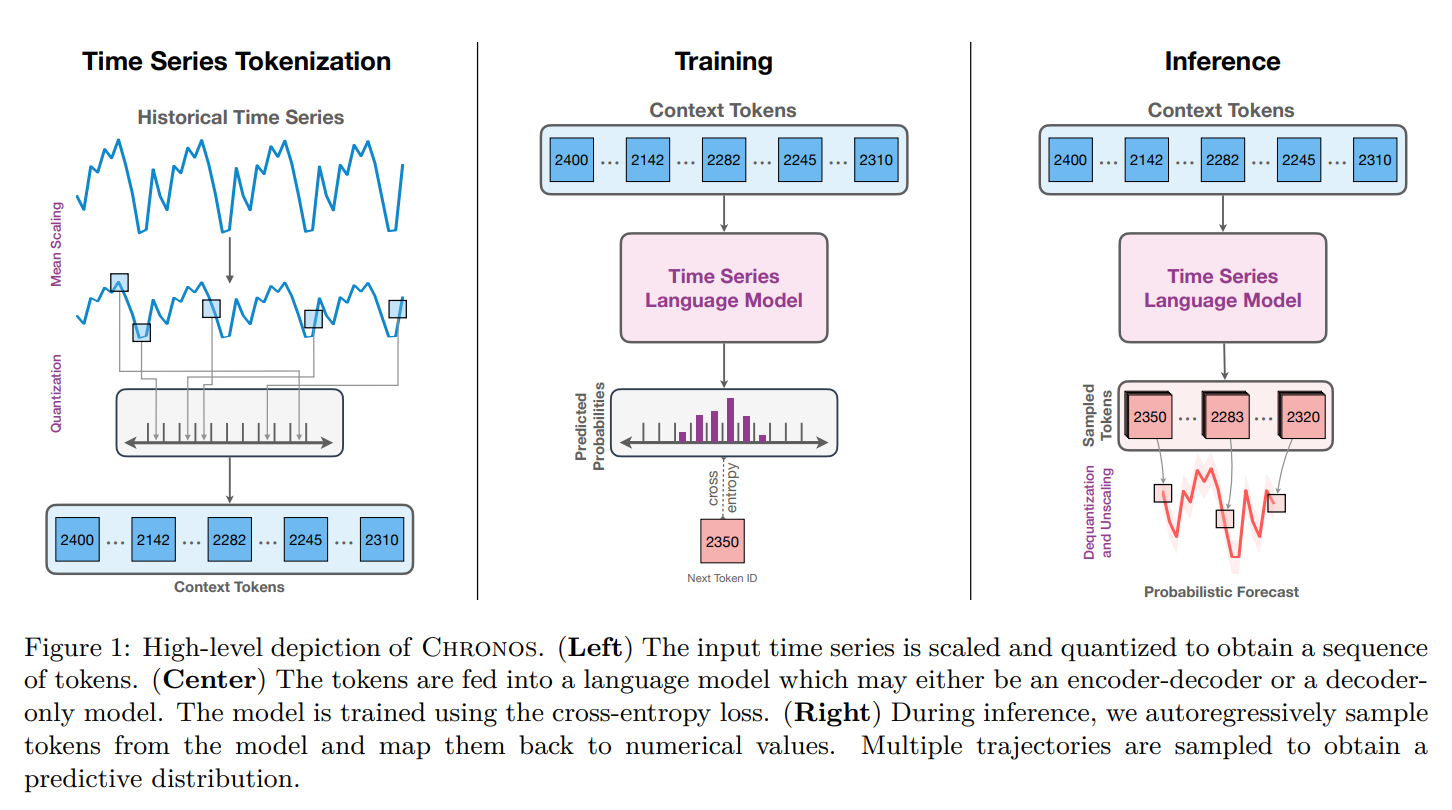

Chronos: Learning the Language of Time Series

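- A framework for pretrained time series forecasting models: it tokenizes time series values through scaling and quantization, then trains language model architectures on the resulting tokens.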

- Paper

Stay up to date

Interested in future weekly updates? Stay up to date by joining our Slack Community!