SEP 11, 2024

AI News #17

April 01, 2024

Here’s what caught our eye this past week.

OpenAI releases Sora collaborations with artists

- OpenAI has been working with visual artists to understand how Sora helps in their creative process. Artists find that Sora lets them prototype brand new ideas with fewer technical and creative constraints, faster than ever before.

- Announcement

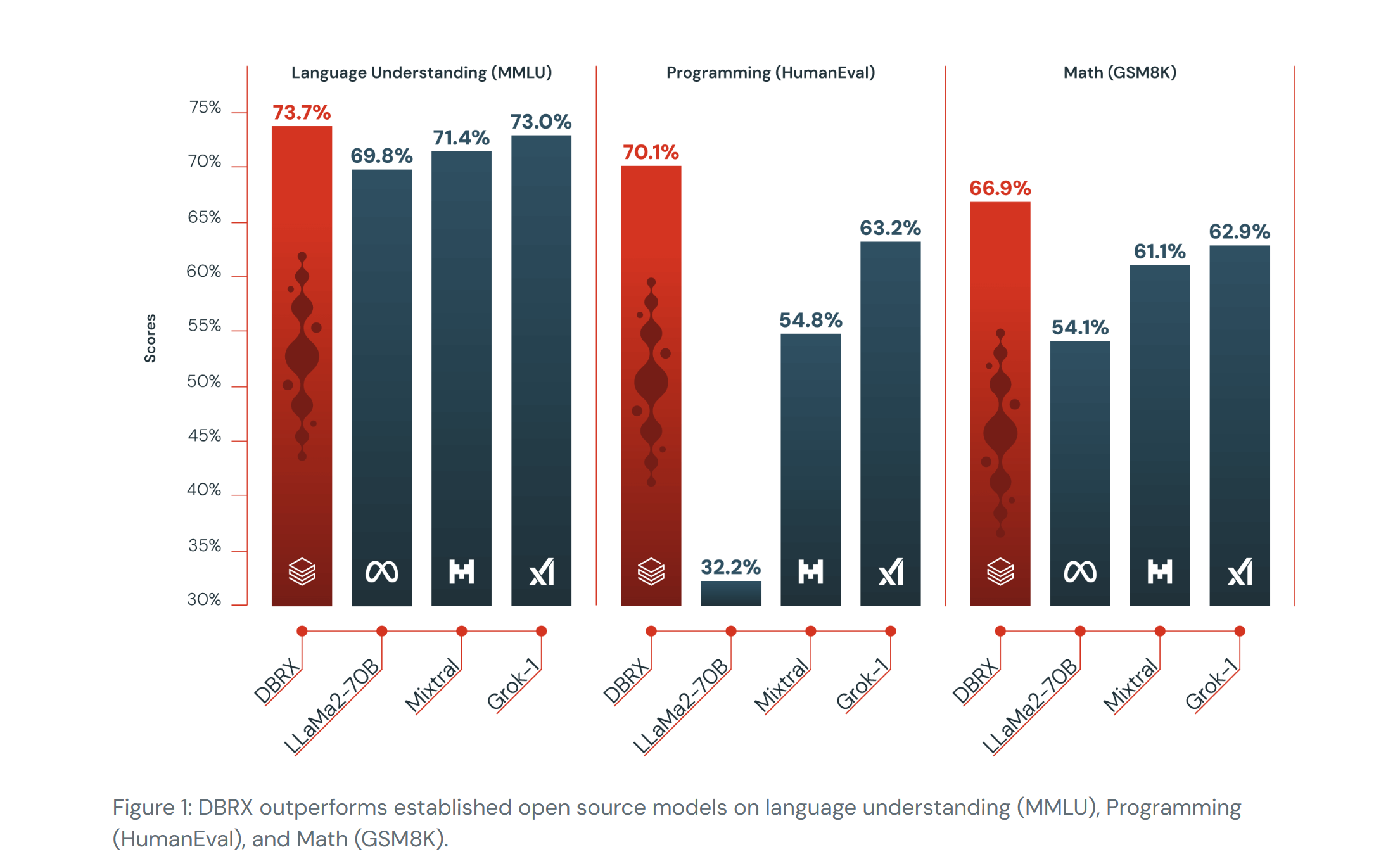

DBRX announced by Databricks

- DBRX, the latest state-of-the-art open LLM, is a mixture-of-experts (MoE) model that uses a larger number of smaller experts than models like Mixtral and Grok-1. It was pre-trained on 12T tokens of text and code data and has 132B total parameters.

- Announcement

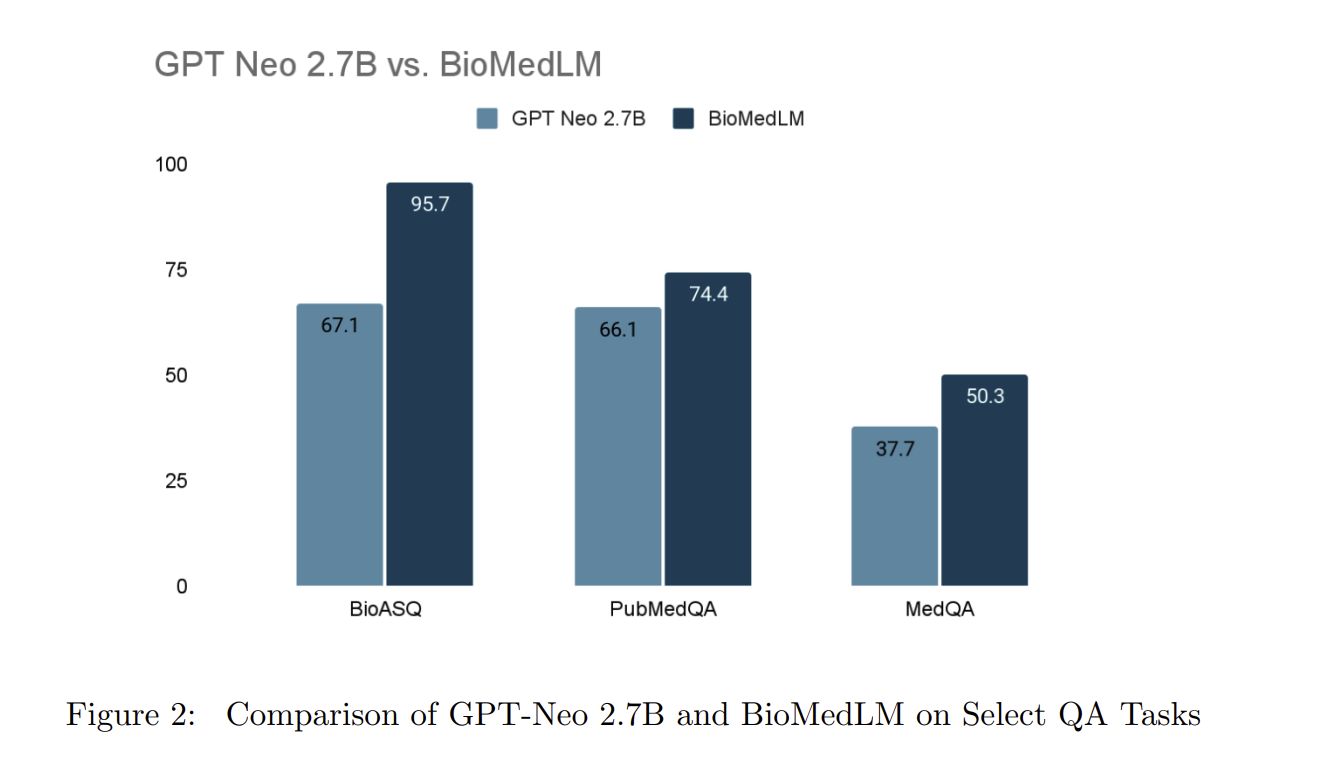
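To make "a larger number of smaller experts" concrete, here is a small back-of-the-envelope sketch. The routing figures are assumptions not stated in the summary above: as we recall from the Databricks announcement, DBRX routes each token to 4 of 16 experts, while Mixtral and Grok-1 route to 2 of 8. The snippet only counts how many distinct expert combinations each setup allows.

```python
from math import comb

# Assumed routing configurations (taken from our reading of the announcement,
# not from the summary above):
#   DBRX:            16 experts, 4 active per token
#   Mixtral, Grok-1:  8 experts, 2 active per token
dbrx_combinations = comb(16, 4)
mixtral_combinations = comb(8, 2)

# How many times more expert combinations the finer-grained setup allows.
ratio = dbrx_combinations // mixtral_combinations

print(dbrx_combinations, mixtral_combinations, ratio)
```

Under those assumptions, the finer-grained design gives the router far more ways to mix experts for each token.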

BioMedLM: A 2.7B Parameter Language Model Trained On Biomedical Text

- An open-source GPT-style model trained exclusively on PubMed abstracts and full articles, designed to perform well on biomedical NLP tasks while removing the constraints of using much larger, closed-source models like GPT-4.

- Paper

- Model

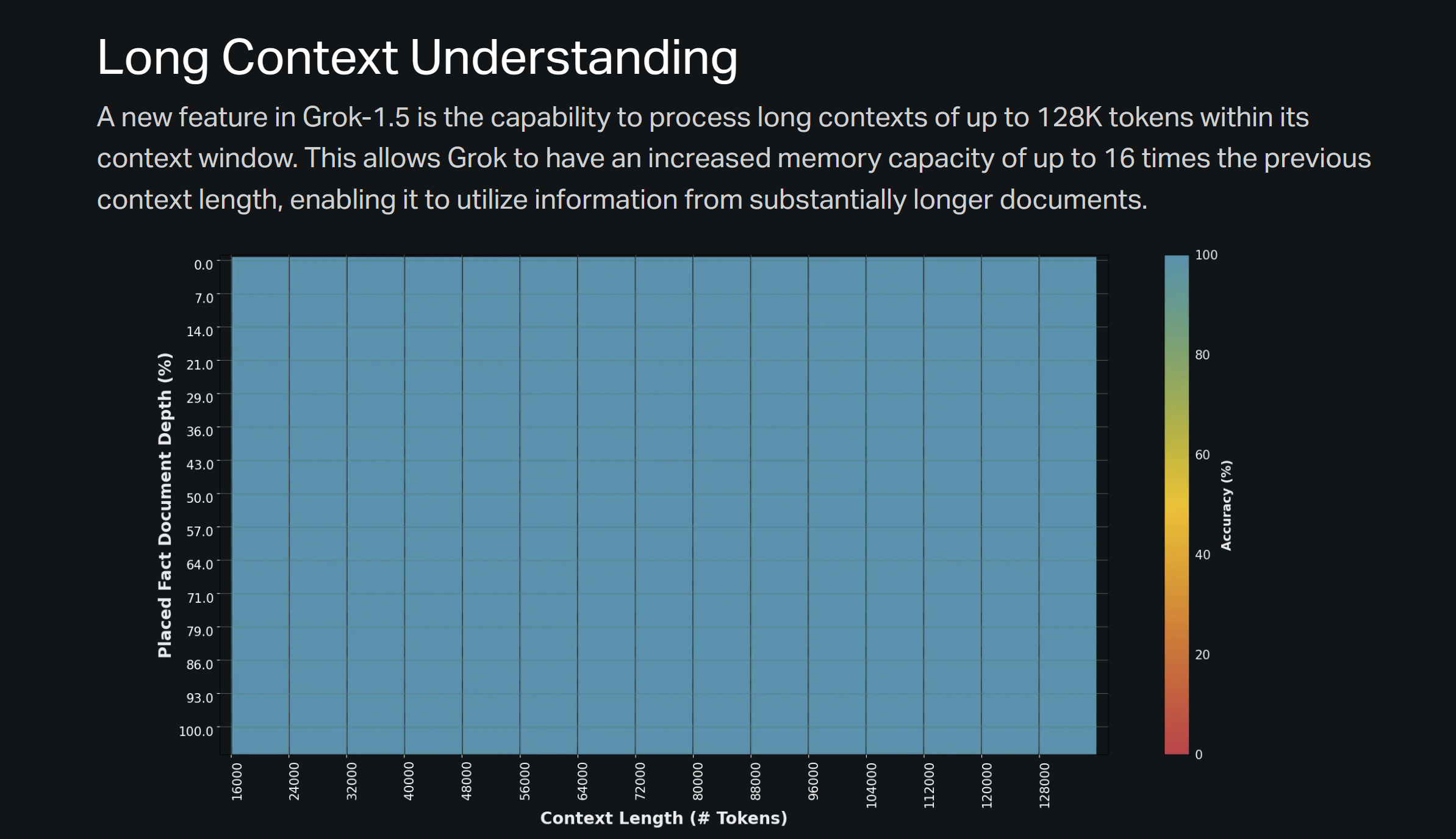

Grok-1.5 released

- An improvement on Grok-1, Grok-1.5 is xAI’s latest model, capable of long-context understanding and advanced reasoning.

- Announcement

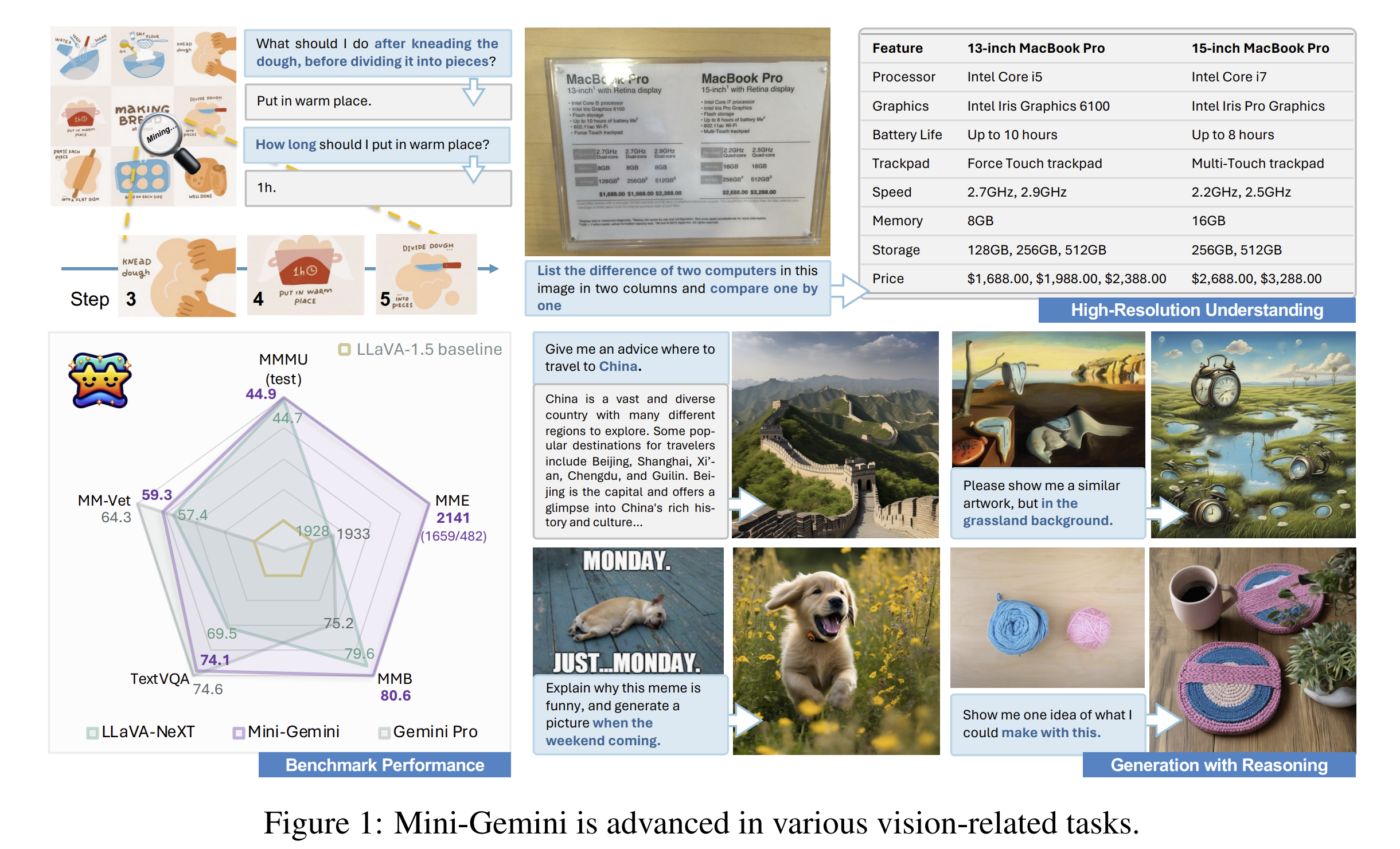

Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models

- The authors introduce Mini-Gemini, which narrows the performance gap between open-source VLMs and models like GPT-4 and Gemini by mining three aspects of VLMs: high-resolution visual tokens, high-quality data, and VLM-guided generation.

- Paper

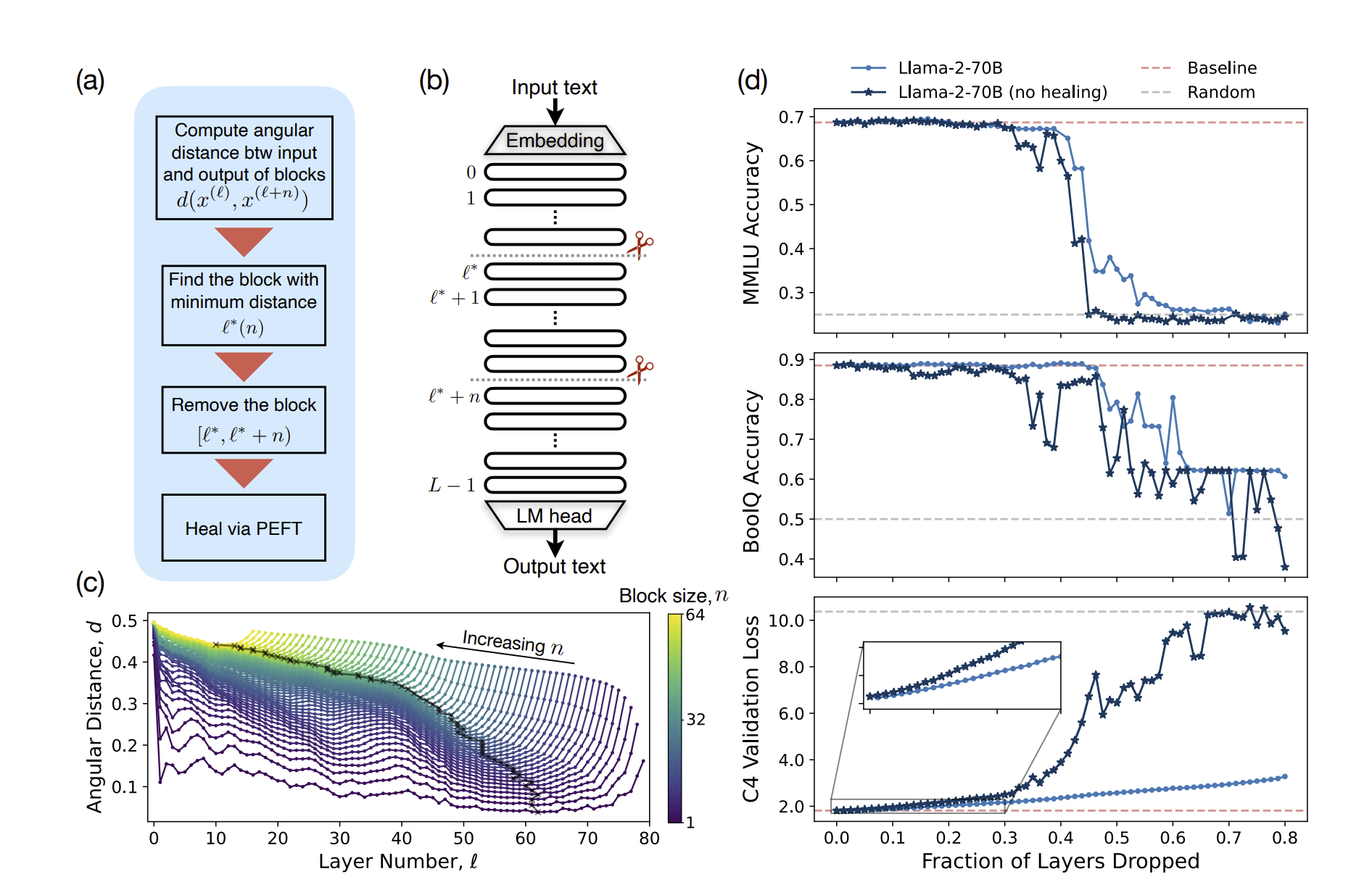

The Unreasonable Ineffectiveness of the Deeper Layers

- The authors remove up to half of the layers from open-weight pretrained LLMs and find that performance is not severely degraded. This suggests that current pretraining methods are not properly using the parameters in the deeper layers, or that the shallow layers play a critical role in storing knowledge.

- Paper

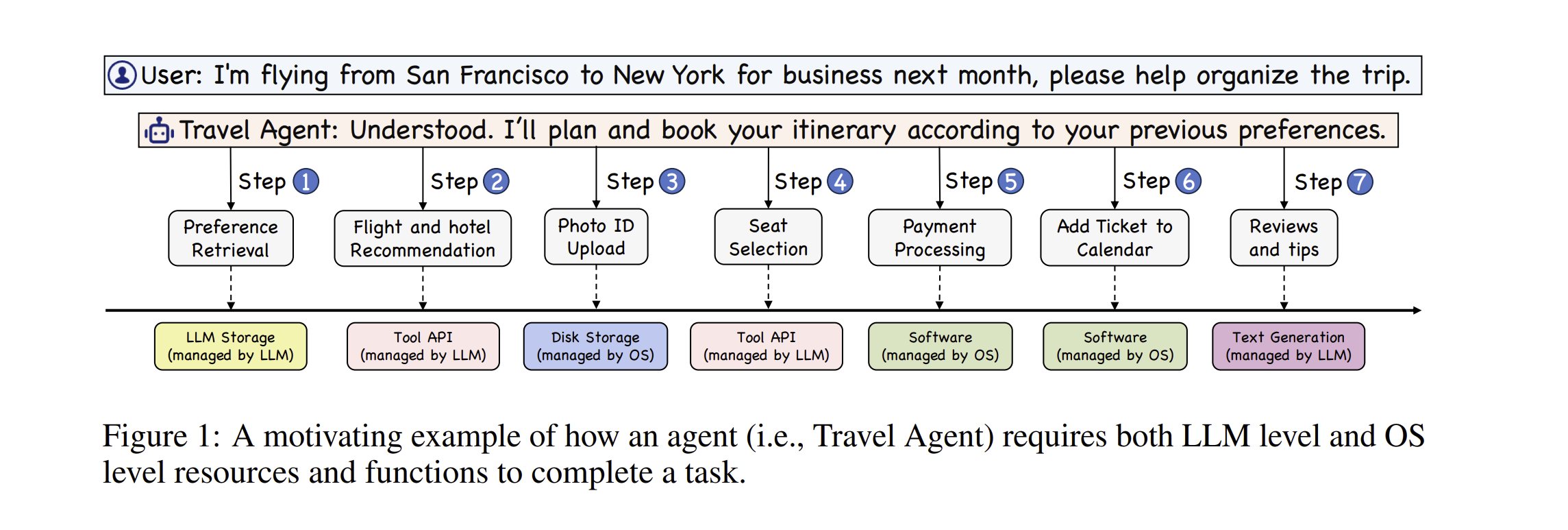
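One way to picture layer removal is as a search for the block of consecutive layers whose input and output representations are most alike, since skipping that block should change the model the least. The toy sketch below assumes an angular-distance similarity measure, which is our reading of the paper's approach. The function names and the 2-D "hidden states" are hypothetical, and there is no actual model or follow-up fine-tuning here.

```python
import math

def angular_distance(u, v):
    """Angular distance in [0, 1]: arccos(cosine similarity) / pi."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))  # clamp rounding error
    return math.acos(cosine) / math.pi

def best_block_to_drop(hidden_states, block_size):
    """hidden_states[i] is the representation entering layer i; the last entry
    is the final output. Returns the start index of the block of `block_size`
    consecutive layers whose input and output are closest."""
    candidates = range(len(hidden_states) - block_size)
    return min(
        candidates,
        key=lambda s: angular_distance(hidden_states[s], hidden_states[s + block_size]),
    )

# Hypothetical 4-layer model: layers 1 and 2 barely change the representation.
states = [[1.0, 0.0], [0.0, 1.0], [0.1, 1.0], [0.0, 1.0], [-1.0, 0.0]]
block_size = 2
start = best_block_to_drop(states, block_size)

num_layers = len(states) - 1
kept_layers = [i for i in range(num_layers) if not start <= i < start + block_size]
print(start, kept_layers)
```

In this toy setup the search picks layers 1 and 2 for removal, because the representation entering layer 1 is identical to the one leaving layer 2.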

LLM Agent Operating System

- AIOS introduces an operating system specifically designed for large language model agents, optimizing resource allocation and facilitating efficient interaction among heterogeneous agents to improve their performance and address scalability challenges.

- [Paper](https://arxiv.org/abs/2403.16971)

- Project

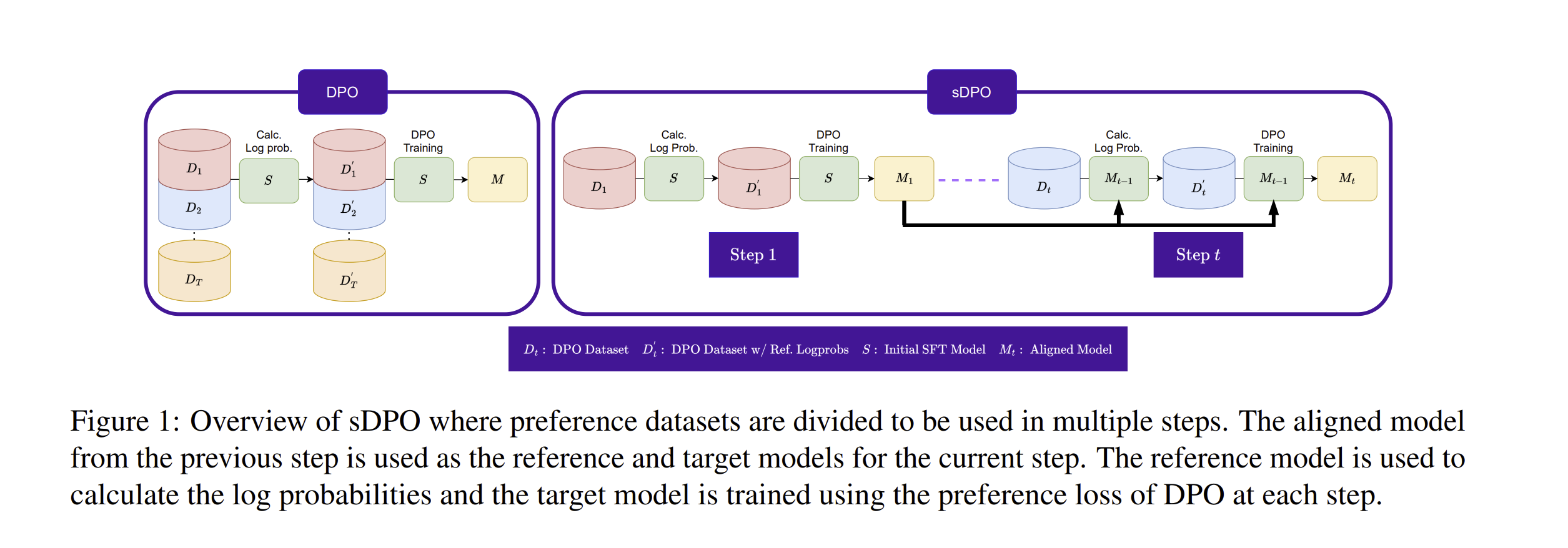

sDPO: Don’t Use Your Data All at Once

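- sDPO (stepwise DPO) extends the recently popularized direct preference optimization (DPO) for alignment tuning: the preference data is split up and used step by step rather than all at once, with the model aligned in the previous step serving as the reference model for the current one.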

- Paper

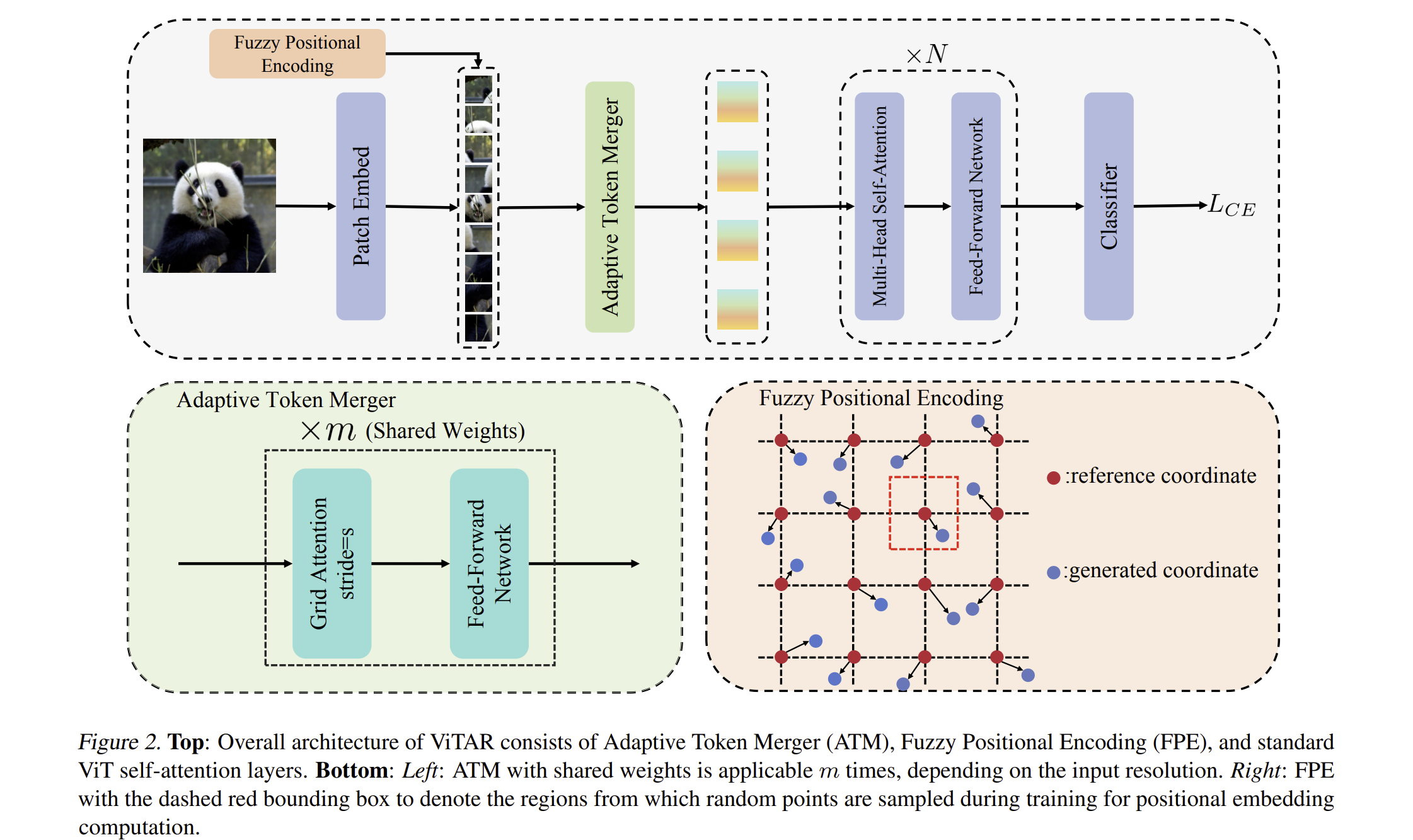
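For readers new to DPO, the objective being extended can be sketched in a few lines. This is the standard per-example DPO loss, not code from the sDPO paper. The function name, the `beta` default, and the log-probability values are illustrative assumptions. In a stepwise setup, the reference log-probabilities at each step would come from the model aligned in the previous step.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss: -log(sigmoid(beta * margin)).

    The margin measures how much more the policy prefers the chosen response
    over the rejected one, relative to the reference model.
    """
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    # -log(sigmoid(x)) equals log(1 + exp(-x))
    return math.log1p(math.exp(-beta * margin))

# Policy identical to the reference: margin is 0, so the loss is log(2).
baseline = dpo_loss(-1.0, -2.0, -1.0, -2.0)

# Policy favors the chosen response more than the reference does: lower loss.
improved = dpo_loss(-0.5, -3.0, -1.0, -2.0)

print(baseline, improved)
```

The loss only falls when the policy moves away from its reference in the preferred direction, which is why the choice of reference model at each step matters.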

ViTAR: Vision Transformer with Any Resolution

- ViTAR enhances Vision Transformers’ adaptability to various resolutions through a dynamic resolution adjustment module and fuzzy positional encoding, achieving high accuracy across a range of resolutions while reducing computational costs.

- Paper

Stay up to date

Interested in future weekly updates? Stay up to date by joining our Slack Community!