How to Build an Enterprise Deep Learning Platform, Part One

May 12, 2020

Parts two and three of the series are available now!

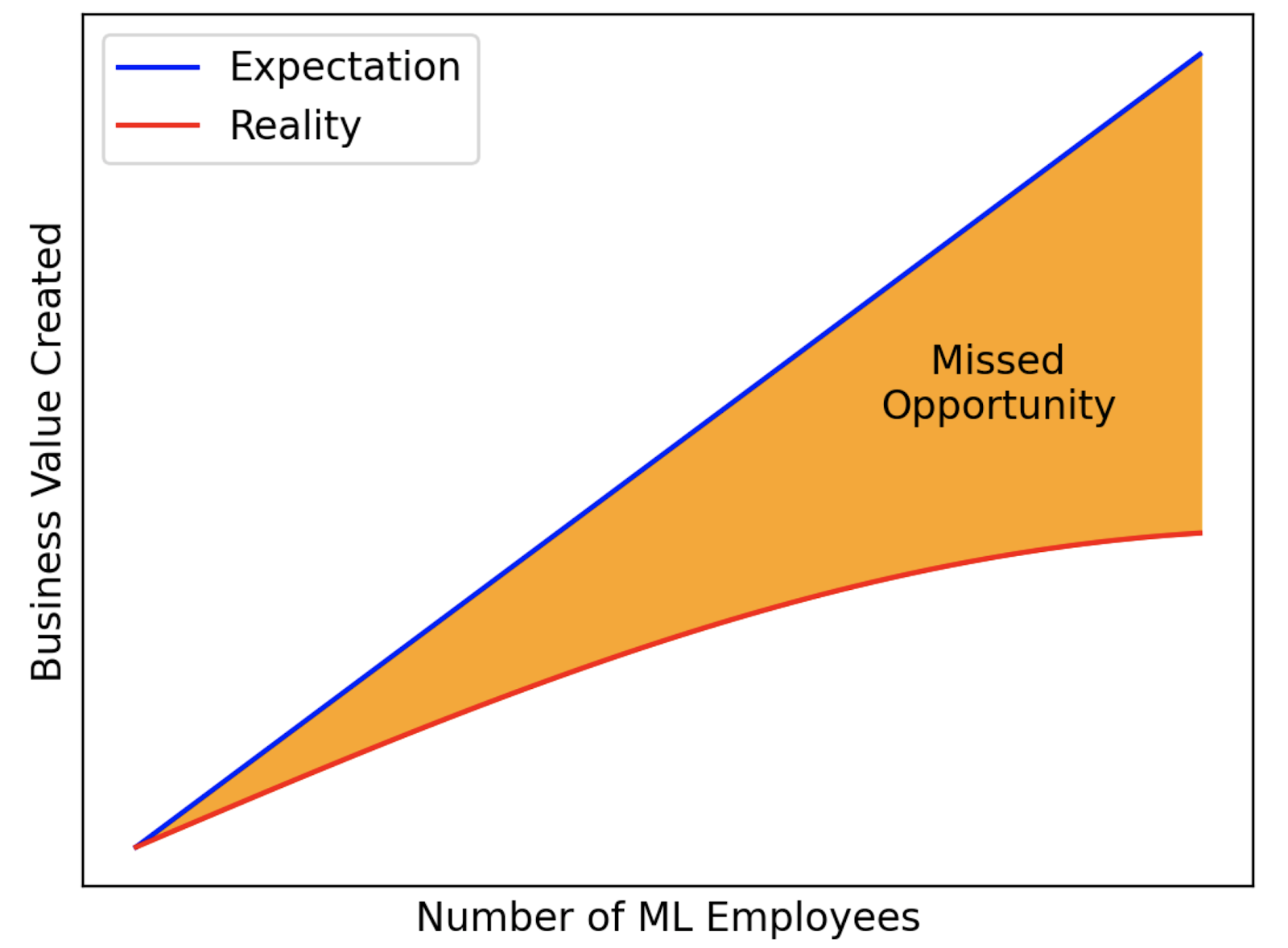

There is an incredibly common story playing out in countless enterprises in 2020. It sounds something like this:

- An enterprise realizes that deep learning presents huge opportunities: new revenue potential, product ideas, cost reductions, etc.

- The enterprise starts a small team (3-5 people) to research deep learning applications

- The small team succeeds with an initial use case and demonstrates $1 million in potential business value

- The enterprise tries to scale the ML team 5-10x, hoping for $5-10 million in business value

- The scaled team runs into all sorts of scaling issues, particularly around managing data, collaborating, sharing compute resources, and deploying models

- The scaled team struggles to put any models into production, resulting in delays, large expenses, and returns that don’t match the promise of the initial proof of concept

At this point, enterprises realize something is broken and attempt to improve their team’s ability to scale. For a surprising number of organizations, this goes something like:

- Read Uber’s Michelangelo blog post

- Decide to build a machine learning platform

Building a machine learning platform is no easy endeavor, though. The vast majority of companies that want to make money with deep learning aren’t software companies with the time, money, or expertise to build their own platform from scratch. As a result, most companies buy software or use open source solutions to assemble their platform.

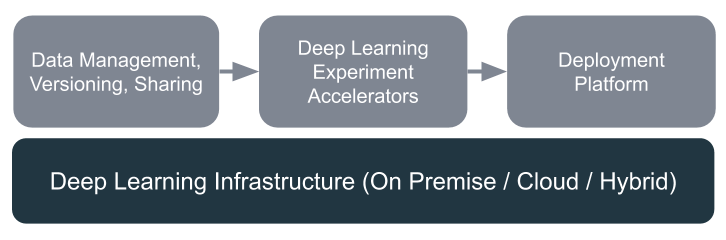

What You Need in a Deep Learning Platform

To understand which pieces an effective deep learning platform needs, you first need to understand what was slowing down the big ML team you just scaled up. A few common patterns emerge:

- Deep learning infrastructure is complicated! Between storing massive datasets, needing GPUs for computation, and provisioning hardware for deployment, managing the infrastructure for deep learning can be really difficult. A platform should keep machine learning scientists from getting bogged down in infrastructure minutiae.

- Datasets used for deep learning are unwieldy. Whether your dataset is millions of labeled images or billions of sentence pairs, storing, sharing, and versioning this data can be difficult. You need good tools so people can work together on datasets.

- Training models can be very time-consuming! As model complexity grows, your ML team will spend far more time figuring out how to distribute training and tune hyperparameters, and far less time churning out new models. You need tools that automate these low-level tasks for your team.

- Deploying models to production can be really tricky. Teams across a wide range of enterprises report that it takes up to six months per model! Tools that automate model deployment and monitoring can greatly accelerate your team.

- Teams need a new set of tools to collaborate on machine learning. The experiment-driven nature of data science is fundamentally different from software engineering. As a result, the tools and methods built for managing software engineering projects (Git, testing frameworks, Agile, …) are not sufficient for collaborating on model building.

With these goals in mind, an enterprise can begin identifying tools to fill these gaps and accelerate its deep learning team. Unfortunately, even adopting software that other companies have built can prove difficult. There are dozens of companies in the ML software space, and figuring out what their offerings do, let alone which companies do it best, can be really hard. How can you tell the difference between ten products that all claim to be ML platforms?

In the second part of this three-part series, we will discuss the popular open source tools that can comprise an end-to-end deep learning platform. Part three will provide a concrete example of an open source deep learning platform in action.